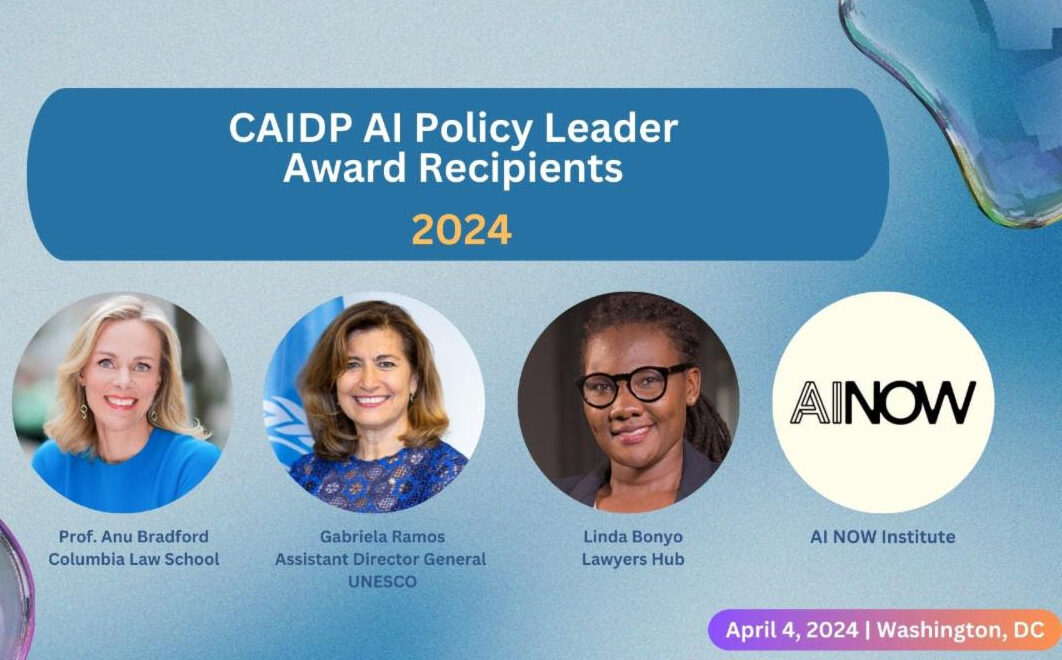

AI Now Institute Co-Directors Amba Kak and Sarah Myers West delivered the following remarks at CAIDP’s 2024 AI Policy Awards, where the Institute received the 2024 AI Policy Leader Award:

We are so grateful for this recognition – especially from peers.

AI Now was founded in 2016, following a set of symposia with the Obama White House addressing the – then already burgeoning – social and political impacts of AI. We took the reins of the organization in January 2023, having just left the FTC, where we worked on Big Tech cases (several of which have since seen their way into the world!) and on the agency’s evolving strategy for emerging tech. We left this work with a clear-eyed conviction that concentration of power was the key diagnosis that should drive and connect our advocacy to address AI harms. Losing sight of the political economy meant that industry could easily weaken or subvert the policy trajectory, and that we would keep pushing the same ball up the same hill.

We are and remain firm believers in the generative power of critique. But if the first phase of AI Now focused on rigorous diagnosis, it was clear to us that in this next phase we needed to drive toward action, and that’s the task we’ve focused on over the past year. We can’t keep asking questions we already know the answers to, or get trapped in a cycle of having to bring forth “new evidence” while those who often fight accountability bring nothing less than larger-than-life narratives backed by tremendous amounts of capital.

We’re emerging from a unique moment in the history of AI, not because of anything particular to the technology or its harms, but because of the tone and tenor of the hype cycle that surrounds it. The business model animating this technology is continuous with, not a disruption to, the familiar trajectory of unbridled surveillance and consolidation that characterized the last decade. We need to learn our lessons and respond assertively through regulation, public advocacy, and organizing.

We brought that tone and urgency to our landscape report in April, framing it as a broad-ranging manifesto intended to provide directional focus and a bold vision for AI policy. The goal was to thread together silos of substance and networks of people: providing a strategic roadmap for policy coalitions across labor, civil rights/equity, privacy, and competition. There’s much more to do to confront the significant levels of concentration in the industry: the economic and political power that AI firms have amassed exceeds that of some nations, and the firms are behaving accordingly. Many civil society actors, journalists, and worker groups in this space have been tirelessly pushing for our regulatory authorities to step up and curb the excesses of corporate power, and we’ll continue to join them in this effort.

Looking ahead, we need to start from the questions: Why AI in the first place? Why assume that the current trajectory of large-scale AI is inevitable? In the context of burgeoning public investments in AI, we’re now asking questions like: Why not prioritize keeping libraries open on weekends, or paying for school lunch? As our friends at the Surveillance Resistance Lab have shown through their work interrogating New York City’s MyCity platform, these are often very real tradeoffs that we’re making in the name of breezy claims to innovation.

We want to start reorienting the conversation in this way: not confining ourselves to reactive responses but asserting a vision of the future we do want, and, rather than focusing overwhelmingly on risks, putting equal scrutiny on alleged benefits and on whom they accrue to. This is necessarily a “big tent” conversation – not one to be carried forward within the silos of tech policy, but broken open to include those working on the front lines of the countless sectors in which AI is being pushed amid the hype cycle: healthcare, education, climate science, creative industries, the military, and more.

In fact, just this week we got another sobering reminder in the +972 Magazine investigation into the use of algorithmically mediated warfare techniques that provide further cover for the indiscriminate killing of Palestinians in Gaza. AI policy cannot take place in a technocratic bubble divorced from the contexts in which AI is actively being weaponized; those working on AI policy will need to confront what AI is actually used for, and by whom.

Thanks again to Merve, Marc, and the CAIDP team for bringing the community together in a way that allows us both to celebrate and to reflect on the work we do.