Related Publications [2]

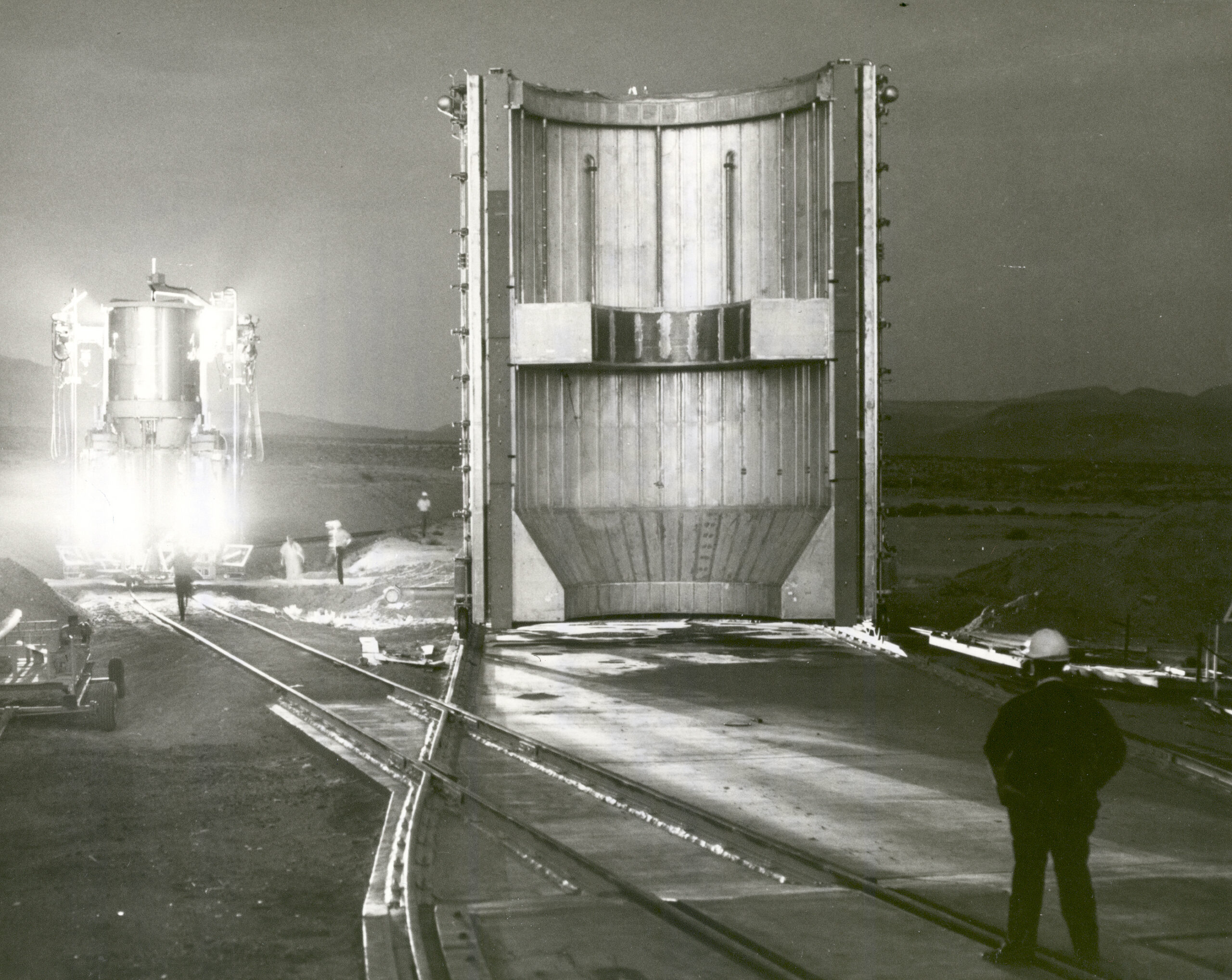

Fission for Algorithms: The Undermining of Nuclear Regulation in Service of AI

Nov 11, 2025

New Report on the National Security Risks from Weakened AI Safety Frameworks

Apr 21, 2025

Related Press [15]

‘Atoms for Algorithms:’ The Trump Administration’s Top Nuclear Scientists Think AI Can Replace Humans in Power Plants

404 Media

Dec 4, 2025

Power Companies Are Using AI To Build Nuclear Power Plants

Both Guerra and Khlaaf are proponents of nuclear energy, but worry that the proliferation of LLMs, the fast tracking of nuclear licenses, and the AI-driven push to build more plants is dangerous. “Nuclear energy is safe. It is safe, as we use it. But it’s safe because we make it safe and it’s safe because we spend a lot of time doing the licensing and we spend a lot of time learning from the things that go wrong and understanding where it went wrong and we try to address it next time,” Guerra said.

404 Media

Nov 14, 2025

The Destruction in Gaza Is What the Future of AI Warfare Looks Like

“AI systems, and generative AI models in particular, are notoriously flawed with high error rates for any application that requires precision, accuracy, and safety-criticality,” Dr. Heidy Khlaaf, chief AI scientist at the AI Now Institute, told Gizmodo. “AI outputs are not facts; they’re predictions. The stakes are higher in the case of military activity, as you’re now dealing with lethal targeting that impacts the life and death of individuals.”

Gizmodo

Oct 31, 2025

ChatGPT safety systems can be bypassed to get weapons instructions

“That OpenAI’s guardrails are so easily tricked illustrates why it’s particularly important to have robust pre-deployment testing of AI models before they cause substantial harm to the public,” said Sarah Myers West, a co-executive director at AI Now, a nonprofit group that advocates for responsible and ethical AI usage.

NBC News

Oct 31, 2025

Anthropic Has a Plan to Keep Its AI From Building a Nuclear Weapon. Will It Work?

For Heidy Khlaaf, the chief AI scientist at the AI Now Institute with a background in nuclear safety, Anthropic’s promise that Claude won’t help someone build a nuke is both a magic trick and security theater. She says that a large language model like Claude is only as good as its training data. And if Claude never had access to nuclear secrets to begin with, then the classifier is moot.

Oct 20, 2025

How AI safety took a backseat to military money

AI firms are now working with weapons makers and the military. Safety expert Heidy Khlaaf breaks down what that means.

The Verge

Sep 25, 2025

She’s investigating the safety and security of AI weapons systems.

Besides being error-prone, there’s another big problem with large language models in these kinds of situations: They’re vulnerable to being compromised, which could allow adversaries to hijack systems and impact military decisions. Despite these known issues, militaries all over the world are increasingly using AI—an alarming reality that now drives the work of pioneering AI safety researcher Heidy Khlaaf.

MIT Technology Review

Sep 8, 2025

Experts worry about transparency, unforeseen risks as DOD forges ahead with new frontier AI projects

“We’ve particularly warned before that commercial models pose a much more significant safety and security threat than military purpose-built models, and instead this announcement has disregarded these known risks and boasts about commercial use as an accelerator for AI, which is indicative of how these systems have clearly not been appropriately assessed,” Khlaaf explained.

DefenseScoop

Aug 4, 2025

The A.I. Cold War

"The push to integrate A.I. products everywhere grants A.I. companies power that goes beyond financial incentives, enabling them to concentrate power in a way we've never seen before," Dr. Heidy Khlaaf, chief A.I. scientist at the A.I. Now Institute, told me.

Puck News

Jul 29, 2025

How the White House AI plan helps, and hurts, in the race against China

Sarah Myers West, co-executive director of the AI Now Institute, told Defense One that the new action plan “amounts to a workaround” of that failed provision. “The action plan, at its highest level, reads just like a wish list from Silicon Valley,” she said.

Defense One

Jul 23, 2025

Musk’s xAI was a late addition to the Pentagon’s set of $200 million AI contracts, former defense employee says

The Pentagon’s use of commercial LLMs has drawn some criticism, in part because AI models are generally trained on enormous sets of data that may include personal information on the open web. Mixing that information with military applications is too risky, said Sarah Myers West, a co-executive director of the AI Now Institute, a research organization.

NBC News

Jul 22, 2025

Big Tech enters the war business: How Silicon Valley is becoming militarized

“We argue that this is simply a cover for these companies to concentrate even more power and funding,” says Heidy Khlaaf, chief AI scientist at the AI Now Institute, a research center focused on the societal consequences of AI. Presenting themselves as protagonists of a quasi-civilizational crusade protects tech companies from “regulatory friction,” branding any call for accountability as “a detriment to national interests.” And it allows them to position themselves “not only as too big, but also as too strategically important to fail,” reads a recent AI Now Institute report.

El País

Jul 21, 2025

What OpenAI Doesn’t Want You to Know

AI companies are spending millions to get the laws they want. They're not trying to cure cancer, or save America. These companies want to make $100 billion overnight, and they're willing to sponsor dangerous laws to make it happen.

More Perfect Union

Jul 2, 2025

Big AI isn’t just lobbying Washington—it’s joining it

On Tuesday, the AI Now Institute, a research and advocacy nonprofit that studies the social implications of AI, released a report that accused AI companies of “pushing out shiny objects to detract from the business reality while they desperately try to derisk their portfolios through government subsidies and steady public-sector (often carceral or military) contracts.” The organization says the public needs “to reckon with the ways in which today’s AI isn’t just being used by us, it’s being used on us.”

Fortune

Jun 6, 2025

DeepMind’s 145-page paper on AGI safety may not convince skeptics

Heidy Khlaaf, chief AI scientist at the nonprofit AI Now Institute, told TechCrunch that she thinks the concept of AGI is too ill-defined to be “rigorously evaluated scientifically.”

TechCrunch

Apr 2, 2025