In the din of heated public debates about artificial intelligence, from speculative doomsday predictions to starry-eyed visions of an AI utopia, people on both sides of the argument tend to cast society as the object of an “inevitable” transformation: We are all passive witnesses to the onward march of technology, which we endow with almost divine agency.

But as we’ve argued carefully throughout this report, this is a fight about power, not progress. We need not reconcile ourselves to passive alarm, nor engage in heedless celebration of speculative futures: Instead, the path ahead requires reclaiming agency over the trajectory of AI. In order to build a movement toward a just and democratic society, we must contest the ways AI is being used to foreclose it. Only when we do so can we seed a new path defined by autonomy, dignity, respect, and justice for all of us.

Here, we offer a high-level road map of strategic levers and case studies to illuminate the opportunity before us. Some of these speak more directly to advocacy campaigns and narrative strategy; others offer regulatory directions for reining in unaccountable power and rebalancing it:

- Target how the AI industry works against the interests of everyday people

- Advance worker organizing to protect the public and our institutions from AI-enabled capture

- Enact a “zero-trust” policy agenda for AI

- Bridge networks of expertise, policy, and narrative to strengthen AI advocacy

- Reclaim a positive agenda for public-centered innovation without AI at the center

Let’s examine each of these in turn.

Target How the AI Industry Works Against the Interests of Everyday People

After decades of mounting inequity, the foundations of a good life have been priced out of reach for most people to such an extent that, in the aftermath of the 2024 election, there’s growing consensus across the ideological spectrum that focusing on the material conditions and economic interests of working people is key to building political power.[1: See Elizabeth Wilkins, “Restoring Economic Democracy: Progressive Ideas for Stability and Prosperity,” Roosevelt Institute, April 29, 2025, https://rooseveltinstitute.org/publications/restoring-economic-democracy; Ezra Klein, “Would Bernie Have Won?” Ezra Klein Show, November 26, 2024, https://www.nytimes.com/2024/11/26/opinion/ezra-klein-podcast-faiz-shakir.html; Pippa Norris et al., “Trump, Brexit, and the Rise of Populism: Economic Have-Nots and Cultural Backlash,” unpublished research project, HKS Faculty Research Working Paper Series RWP16-026, Harvard Kennedy School, 2016; and George Hawley, “The Political Economy of Right-Wing Populism in the United States,” Promarket, May 24, 2024, https://www.promarket.org/author/george_hawley.] While the Trump campaign succeeded in advancing a pro-working-class message, this may in fact be the administration’s core vulnerability,[2: Patrick Wintour, “Did Trump’s Tariffs Kill Economic Populism?” Guardian, April 12, 2025, https://www.theguardian.com/world/2025/apr/12/did-trump-tariffs-economic-populism-globalisation; Alexander R. Ross, “Steve Bannon and Elon Musk Are Battling for the Soul of Trumpism,” New Lines Magazine, April 15, 2025, https://newlinesmag.com/argument/steve-bannon-and-elon-musk-are-battling-for-the-soul-of-trumpism.] given that much of the administration’s policy so far has served to secure the loyalty of tech and financial elites. There is momentum and urgency around finding ways to drive a wedge through this hypocrisy. The road map we lay out in this section explores ways to ensure that the AI fight revolves not around progress but around the tech industry’s amassing of power, which profoundly affects people’s day-to-day lives.

That the AI industry is fundamentally incentivized to hurt the interests of working people and families appears not to be widely understood yet, partly because the most high-profile narratives around AI risk concern technically framed bias or existential risk, which are often disconnected from people’s material realities. The fact that AI expertise largely circulates in elite and urban corridors only exacerbates the problem. As we’ve shown throughout this report, investment and interest in AI are not about supporting progress for the many, but about hoarding power for the few, by any means available: asymmetric control over information; gatekeeping of infrastructure; hollowing out agency for citizens, workers, and consumers; or supporting political allies, financially or otherwise, who side with Big Tech.

For those of us whose research and advocacy are centered on AI, this means that we need not only to make AI-related issues more relevant to movements fighting for economic populism and against tech oligarchy, but also to better target the AI industry as a key actor working against the interests of the working public. The writing is on the wall: We need to prioritize policy issues that are rooted in people’s lived experiences with AI, particularly those that bear on their most urgent material conditions. This involves giving the broader public a way to “see” AI systems as the invisible infrastructures that mediate their lives, often for the worse, and helping them connect the dots between the harms of AI and the unchecked power of Big Tech. Fortunately, there are existing windows of opportunity for doing just this:

1. DOGE Pushback

The evisceration of government agencies by DOGE brings home the harms that tech oligarchs can inflict on the public. This makes it an important front for resistance to AI. There is widespread discontent with the workings of DOGE teams even among those who advocate for greater government efficiency,[3: Ezra Klein, “In This House, We’re Angry When Government Fails,” Ezra Klein Show, November 22, 2024, https://www.nytimes.com/2024/11/22/opinion/ezra-klein-podcast-jennifer-pahlka-steven-teles.html.] and reporting thoroughly documents DOGE’s failure to achieve its stated goals.[4: David A. Fahrenthold and Jeremy Singer-Vine, “DOGE is Far Short of Its Goal, and Still Overstating Its Progress,” New York Times, April 13, 2025, https://www.nytimes.com/2025/04/13/us/politics/doge-contracts-savings.html?smid=nytcore-ios-share&referringSource=articleShare.] While DOGE officials claim that “AI” can be used to identify budget cuts, detect fraud,[5: Kate Conger, Ryan Mac, and Madeleine Ngo, “Musk Allies Discuss Deploying A.I. to Find Budget Savings,” New York Times, February 3, 2025, https://www.nytimes.com/2025/02/03/technology/musk-allies-ai-government.html.] automate government tasks,[6: Makena Kelly and Zoe Schiffer, “DOGE Has Deployed Its GSAi Custom Chatbot for 1,500 Federal Workers,” Wired, March 7, 2025, https://www.wired.com/story/gsai-chatbot-1500-federal-workers.] and determine whether someone’s job is “mission critical,”[7: Courtney Kube et al., “DOGE Will Use AI to Assess the Responses of Federal Workers Who Were Told to Justify Their Jobs via Email,” NBC News, February 25, 2025, https://www.nbcnews.com/politics/doge/federal-workers-agencies-push-back-elon-musks-email-ultimatum-rcna193439.] reporting has shown again and again that neither these teams nor the technology they are using is up to the task.[8: Aatish Bhatia et al., “DOGE’s Only Public Ledger is Riddled with Mistakes,” New York Times, February 28, 2025, https://www.nytimes.com/2025/02/21/upshot/doge-musk-trump-errors.html; Fahrenthold and Singer-Vine, “DOGE Far Short of Its Goal.”] And while it is still unclear exactly what DOGE employees are doing with the data they’ve gained access to, it is very clear that much of this data is highly lucrative and could be used to further Musk’s business interests, with open questions including where it is stored and whether it is being used to train AI models for Musk’s own companies.[9: Donald S. Beyer Jr. et al. to Russell Vought, February 12, 2025, https://beyer.house.gov/uploadedfiles/letter_from_congress_to_omb_director_on_restoring_public_access_to_federal_data.pdf.]

The outrage over DOGE offers an entry point not only to draw attention to the harm government agencies are creating with their use of AI, but also to make clear that DOGE’s work has never truly been about making our services more “efficient”: The goal has always been to dismantle government services and centralize power (see Chapter 3).[10: Robert Weissman, “DOGE Delusions, A Real-World Plan to Crack Down on Corporate Handouts, Tax the Rich and Invest for the Future,” Public Citizen, January 15, 2025, https://www.citizen.org/article/doge-delusions.] A crucial starting point is building campaigns, with partners like the American Federation of Government Employees, the Federal Unionists Network, and Federal Workers Against DOGE, that connect the pushback against DOGE to the broader pattern of hollowing out social services in the name of AI-enabled “efficiency,” from child services, to the provision of housing, to access to healthcare. Many of these partners have already made significant inroads in helping the public understand and mobilize around DOGE’s harm to workers; broadening the discussion to include how Musk is using AI to help the Trump Administration enact an austerity agenda that impoverishes and disenfranchises the working class, communities of color, the disabled, and those in rural communities could move the needle even further.[11: Kevin De Liban, Inescapable AI, TechTonic Justice, November 2024, https://www.techtonicjustice.org/reports/inescapable-ai.]

2. Data Centers

The unchecked growth of AI infrastructure is proof of how incredibly successful Big Tech has been at making the case that AI, especially large-scale AI, is worthy of exceptional support and investment from public actors. But the arguments that large-scale AI is a worthwhile use of resources, taxpayer dollars, and land all rest on potential economic growth, projections of which are usually based on unfounded assumptions (see Chapter 2: Heads I Win, Tails You Lose). Meanwhile, the harms are definitive and documented, such as those evidenced by Meta and Blackstone’s data centers in rural Georgia[12: “I Live 400 Yards From Mark Zuckerberg’s Massive Data Center,” More Perfect Union, March 27, 2025, YouTube video, https://www.youtube.com/watch?v=DGjj7wDYaiI&ab_channel=MorePerfectUnion.] (see Chapter 1.2: Too Big To Fail and Chapter 1.3: Arms Race 2.0).[13: OpenAI, “OpenAI’s Infrastructure Blueprint for the US,” November 13, 2024, https://media.datacenterdynamics.com/media/documents/OpenAI_Blueprint-DCD.pdf; Abeba Birhane et al., “The Forgotten Margins of AI Ethics,” in 2022 ACM Conference on Fairness, Accountability, and Transparency (2022): 948–958, https://dl.acm.org/doi/10.1145/3531146.3533157; and Abeba Birhane, “AI: Potential Benefits, Proven Risks, Abeba Birhane,” Better Ways (Agile Greece Summit), March 12, 2025, YouTube video, https://www.youtube.com/watch?v=P-p_n4XU0Y8&ab_channel=BetterWays%28AgileGreeceSummit%29.]

The ground is ripe for local mobilization around the material impacts of the AI infrastructure build-out, not only through building, organizing, and campaigning focused on the tech sector but also by forming alliances with the environmental justice movement, which has long and deep experience fighting policies that result in the physical degradation of natural resources, as well as with movements for utility reform and racial justice. Data centers have direct environmental and community health impacts, including (but not limited to) increased greenhouse gas emissions, increased rates of pollution, and the expansion of gas infrastructure and coal plants.[14: Lois Parshley, “The Hidden Environmental Impact of AI,” Jacobin, June 20, 2024, https://jacobin.com/2024/06/ai-data-center-energy-usage-environment; Stand.earth, “A Growing Climate Concern Around Microsoft’s Expanding Data Center Operations,” December 5, 2024, https://stand.earth/insights/a-growing-climate-concern-around-microsofts-expanding-data-center-operations; Yusuf Sar, “The Silent Burden of AI: Unveiling the Hidden Environmental Costs of Data Centers by 2030,” Forbes, August 16, 2024, https://www.forbes.com/councils/forbestechcouncil/2024/08/16/the-silent-burden-of-ai-unveiling-the-hidden-environmental-costs-of-data-centers-by-2030.]
They also frequently draw on public aquifers for the water needed to cool their server racks.[15: Nikitha Sattiraju, “The Secret Cost of Google’s Data Centers: Billions of Gallons of Water to Cool Servers,” Time, April 2, 2020, https://time.com/5814276/google-data-centers-water; Christopher Tozzi, “How the Groundwater Crisis May Impact Data Centers,” Data Center Knowledge, March 12, 2024, https://www.datacenterknowledge.com/data-center-site-selection/how-the-groundwater-crisis-may-impact-data-centers.] This is particularly concerning given plans to expand data center construction in already water-strained areas of the Southwest.[16: Ireland is another extreme example of this problem. See Heidi Vella, “Ireland’s Data Centre Nightmare – and What Others Can Learn From It,” Tech Monitor, February 18, 2025, https://www.techmonitor.ai/hardware/data-centres/irelands-data-centre-nightmare.] In the Netherlands[17: “Dutch Call a Halt to New Massive Data Centres, While Rules are Worked Out,” DutchNews, February 17, 2022, https://www.dutchnews.nl/2022/02/dutch-call-a-halt-to-new-massive-data-centres-while-rules-are-worked-out; Mark Ballard, “Hyperscale Data Centers Under Fire in Holland,” Data Center Knowledge, February 7, 2022, https://www.datacenterknowledge.com/hyperscalers/hyperscale-data-centers-under-fire-in-holland.] and Chile,[18: “Google Says It Will Rethink Its Plans for a Big Data Center in Chile Over Water Worries,” Associated Press, September 17, 2024, https://apnews.com/article/chile-google-data-center-water-drought-environment-d1c6a7a8e8e6e45257ac84fb750b2162.] community-level activism led to a pause on data center construction, which suggests that organizing that targets local government can be an effective lever for curtailing the rollout of data centers.
These issues, along with other consequences like higher energy costs and labor abuses across the supply chain, also cut across party lines and hit rural voters and Indigenous communities[19: Chief Sheldon Sunshine to Premier Smith, January 13, 2024, https://www.sturgeonlake.ca/wp-content/uploads/2020/07/13-01-25-Chief-Sunshine-Open-Letter-Premier-Smith-Re-O-Leary.pdf; Julia Simon, “Demand for Minerals Sparks Fear of Mining Abuses on Indigenous Peoples’ Lands,” NPR, January 29, 2024, https://www.npr.org/2024/01/29/1226125617/demand-for-minerals-sparks-fear-of-mining-abuses-on-indigenous-peoples-lands.] particularly hard, providing other critical opportunities for new organizing.

On the flip side, the firms advocating for data center buildout have little proof that data centers actually provide economic benefits to the communities where they are sited. The evidence that industry actors have provided thus far seems speculative at best, often failing to distinguish between projected full-time jobs tied to data center operations and short-term jobs in construction and the service sector.[20: Sarah O’Connor, “Anatomy of a Jobs Promise,” Financial Times, January 21, 2025, https://www.ft.com/content/2f25065d-3eeb-49f6-a5eb-8d22ed4697a5.] They also ignore the economic impact that higher energy costs and diverted service can have on local businesses.[21: Saijel Kishan, “‘It’s a Money Loser’: Tax Breaks for Data Centers Are Under Fire,” Bloomberg, May 9, 2024, https://www.bloomberg.com/news/articles/2024-05-09/ai-boom-has-some-states-rethinking-subsidies-for-data-centers; Kasia Tarczynska, “Will Data Center Job Creation Live Up to Hype? I Have Some Concerns,” Good Jobs First, February 12, 2025, https://goodjobsfirst.org/will-data-center-job-creation-live-up-to-hype-i-have-some-concerns; Lulu Ramadan and Sydney Brownstone, “How a Washington Tax Break for Data Centers Snowballed Into One of the State’s Biggest Corporate Giveaways,” ProPublica, August 4, 2024, https://www.propublica.org/article/washington-data-centers-tech-jobs-tax-break; Tom Dotan, “The AI Data-Center Boom Is a Job-Creation Bust,” Wall Street Journal, February 25, 2025, https://www.wsj.com/tech/ai-data-center-job-creation-48038b67.] Meanwhile, state and local communities stand to lose billions of dollars in tax revenue to the packages of tax incentives that companies demand before they will agree to build.[22: Zach Schiller, “Indefensible Tax Breaks for Data Centers Will Cost Ohio,” Policy Matters Ohio, January 7, 2025, https://policymattersohio.org/research/indefensible-tax-breaks-for-data-centers-will-cost-ohio; “At $1 Billion, Amazon’s Oregon Subsidy is Largest Known in Company’s History,” Good Jobs First, May 19, 2023, https://goodjobsfirst.org/at-1-billion-amazons-oregon-subsidy-is-largest-known-in-history.]
This again provides a unique opportunity for broad-based coalitional organizing, as both free-market groups and progressive economic organizations have criticized the disconnect between large corporate tax breaks and the lack of tangible benefits for communities.[23: Kishan, “It’s a Money Loser”; Jarrett Skorup, “Michigan Has Authorized $4.7 Billion in Taxpayer-Funded Business Subsidies,” Mackinac Center, January 27, 2025, https://www.mackinac.org/pressroom/2025/michigan-has-authorized-4-7-billion-in-taxpayer-funded-business-subsidies; American Economic Liberties Project, “Tools for Taking on the Corporate Subsidy Machine: Introduction,” September 13, 2022, https://www.economicliberties.us/our-work/subsidy-toolkit-intro; and Greg LeRoy, The Great American Jobs Scam: Corporate Tax Dodging and the Myth of Job Creation (Berrett-Koehler, 2005).] Some local organizations have already taken up the mantle: Citizens Action Coalition in Indiana, for example, has called for a moratorium on new hyperscaler data center construction due to the enormous resource constraints and cost burdens that large data centers pose to local communities,[24: Citizens Action Coalition, “CAC Calls for Data Center Moratorium,” October 15, 2024, https://www.citact.org/news/cac-calls-data-center-moratorium.] and Memphis Community Against Pollution in Tennessee has pushed back against the public health and environmental impacts of Elon Musk’s xAI data center buildout near historically Black neighborhoods already facing elevated cancer and asthma risks.[25: Andrew R. Chow, “Elon Musk’s New AI Data Center Raises Alarms Over Pollution,” Time, September 17, 2024, https://time.com/7021709/elon-musk-xai-grok-memphis/.]

3. Algorithmic Prices and Wages

Across the economy, from grocery stores to online marketplaces to insurance brokers, firms’ ability to use AI to set prices for customers and calibrate wages for workers, drawing on detailed and often intimate information extracted from us, is deepening inequality. This makes algorithmic prices and wages especially fertile ground for mobilizing around AI’s economic impacts on everyday people.[26: Veena Dubal, “On Algorithmic Wage Discrimination,” Columbia Law Review 123, no. 7 (2023): 1929–92, https://columbialawreview.org/content/on-algorithmic-wage-discrimination.]

There is growing coordination between labor organizers and anti-monopoly and economic justice groups working to contend with this issue. In February 2025, for example, AI Now, along with a coalition of organizations and experts, published a report canvassing how widely algorithmic pricing and wage-setting practices are used and spotlighting the ways in which bright-line rules could prevent harm,[27: AI Now Institute et al., AI Now Coauthors Report on Surveillance Prices and Wages, February 20, 2025, https://ainowinstitute.org/publication/ai-now-coauthors-report-on-surveillance-prices-and-wages.] including an outright ban on individualized surveillance prices and wages, and the elimination of loopholes that corporations could exploit to continue the practice.[28: For example, in cases where states provide narrow exceptions for price or wage discrimination on the basis of surveillance data, businesses must bear the burden of clearly establishing that the exception applies.] There has already been considerable movement in the states this session to prohibit both algorithmic pricing and wage-setting, including the introduction of bills in California, Colorado, Georgia, and Illinois.[29: Will Oremus and Lauren Kaori Gurley, “States Eye Bans on ‘Surveillance Pricing’ that Exploits Personal Data,” Washington Post, February 20, 2025, https://www.washingtonpost.com/politics/2025/02/20/surveillance-prices-wages-california-ban.]

Advance Worker Organizing to Protect the Public and Our Institutions from AI-Enabled Capture

(Guest contribution by Andrea Dehlendorf, Senior Fellow)

Labor campaigns over the past several years have demonstrated that when workers and their unions pay close attention to how AI is changing the nature of work and intervene seriously through collective bargaining, contract enforcement, campaigns, and policy advocacy, they can shape how their employers develop and deploy these technologies. The deeper opportunity for labor, however, lies in the fact that across all sectors of the economy and society, it is the people experiencing these technologies firsthand at work who best understand their impacts and limitations, as well as the conditions under which their potential can be realized. Thus, labor’s power can not only shape whether and how AI is used in the workplace but also recalibrate the technology sector’s power overall, ultimately steering the trajectory of AI toward the public interest and the common good.

Over the past decade, labor campaigns challenging the use of generative AI in Hollywood, the algorithmic management of warehouse workers, and platforms for rideshare drivers have been critical to building public awareness of the impact of AI and data technologies in workplaces. In 2023, the Writers Guild of America and Screen Actors Guild strikes challenged studios seeking to deploy generative AI to replace their creative work without fair compensation.[30: Molly Kinder, “Hollywood Writers Went on Strike to Protect Their Livelihoods from Generative AI. Their Remarkable Victory Matters for All Workers,” Brookings, April 12, 2024, https://www.brookings.edu/articles/hollywood-writers-went-on-strike-to-protect-their-livelihoods-from-generative-ai-their-remarkable-victory-matters-for-all-workers.] These public battles over the risks of profit-driven automation in creative industries have won contract language that provides some protection for artists and writers, transformed the public conversation around the implications of technology, and shifted popular understanding of the stakes.[31: Jake Coyle, “In Hollywood Writers’ Battle Against AI, Humans Win (For Now),” Associated Press, September 27, 2023, https://apnews.com/article/hollywood-ai-strike-wga-artificial-intelligence-39ab72582c3a15f77510c9c30a45ffc8.]

Unions, worker centers, and alt-labor groups have also exposed and challenged Amazon’s algorithmic management practices and extensive surveillance. The Warehouse Worker Resource Center developed and won passage of a bill in California that limits the use of technology-enabled productivity quotas, legislation that workers’ organizations are now advancing in other states.[32: Mishal Khan et al., “The Current Landscape of Tech and Work Policy: A Guide to Key Concepts,” UC Berkeley Labor Center, November 12, 2024, https://laborcenter.berkeley.edu/tech-and-work-policy-guide; Noam Scheiber, “California Governor Signs Bill that Could Push Amazon to Change Labor Practices,” New York Times, October 26, 2021, https://www.nytimes.com/2021/09/22/business/newsom-amazon-labor-bill.html.] Rideshare drivers and app-based delivery workers have also organized across a range of formations (Gig Workers Rising,[33: Gig Workers Rising, “About Us,” accessed April 17, 2025, https://gigworkersrising.org/get-informed.] Los Deliveristas Unidos,[34: Workers Justice Project, “Los Deliveristas Unidos,” accessed April 17, 2025, https://www.workersjustice.org/en/ldu.] Rideshare Drivers United,[35: Drivers United, “We’re Uber & Lyft Drivers Uniting for a Fair, Dignified, and Sustainable Rideshare Industry,” accessed April 17, 2025, https://www.drivers-united.org.] SEIU, and others) to challenge platform precarity, winning the right to organize and establish labor protections.
In Massachusetts, drivers won the right to unionize via a statewide ballot measure.[36: Eli Tan, “Ride-Hailing Drivers in Massachusetts Win Right to Unionize,” New York Times, November 6, 2024, https://www.nytimes.com/2024/11/06/technology/uber-lyft-drivers-unionize-massachusetts.html.]

As powerful technology corporations take an unprecedented and self-serving role in the federal government, undermining democratic structures, it is clear that the labor movement has an opportunity to lead on behalf of all of society. Building on the momentum of previous wins, there are five strategic paths for the labor movement to flex its power and increase its influence over the trajectory of AI across our society and economy:

Sectoral Analysis and Action for the Common Good

By developing a comprehensive analysis of how AI and digital technologies are advancing in every economic sector, including their impact on both working conditions and the public interest, workers and their unions can design and implement bargaining, organizing, and policy campaign strategies. These strategies will have the greatest impact when they address both the conditions of work and the consequences for the public.

A key example is National Nurses United (NNU), which has pushed back against profit-driven employers seeking to replace skilled professionals with subpar technology that harms patients. In 2024, NNU conducted a survey of its members,[37: National Nurses United, “National Nurses United Survey Finds A.I. Technology Degrades and Undermines Patient Safety,” May 15, 2024, https://www.nationalnursesunited.org/press/national-nurses-united-survey-finds-ai-technology-undermines-patient-safety.] which found that AI undermines nurses’ clinical judgment and threatens patient safety. The group subsequently released a “Nurses’ and Patients’ Bill of Rights,” a set of guiding principles designed to ensure the just and safe application of AI in healthcare settings.[38: National Nurses United, “Nurses and Patients’ Bill of Rights: Guiding Principles for A.I. Justice in Nursing and Health Care,” accessed April 24, 2025, https://www.nationalnursesunited.org/sites/default/files/nnu/documents/0424_NursesPatients-BillOfRights_Principles-AI-Justice_flyer.pdf.] NNU affiliates have successfully bargained over AI implementation in contract negotiations; in Los Angeles, nurses secured commitments from University of Southern California hospitals that the hospitals will not replace nurses with AI and will center nurses’ judgment in determining both patient care and safe, appropriate staffing levels.[39: Bonnie Castillo, “Humanity is the Heart of Nursing,” National Nurses United, March 29, 2024, https://www.nationalnursesunited.org/article/humanity-is-the-heart-of-nursing.]
At Kaiser Permanente, nurses stopped the rollout of EPIC Acuity, a system that underestimated how sick patients were and how many nurses were needed, and forced Kaiser to establish an oversight committee for the system implementation.40Castillo, “Humanity is the Heart of Nursing.” Beyond the bargaining table, nurses have mobilized their membership out into the streets and to Washington, DC, to protest the hospital industry’s attempts to push scientifically unproven AI instead of investing in safe staffing.

Similar strategies can be designed across most sectors of the economy, providing a critical check on the deployment of AI for cost cutting and profit maximization.

Challenging AI Austerity in the Public Sector

As Elon Musk and others advance AI-driven austerity in the federal government through DOGE, and Republicans and Democrats mount copycat initiatives across the states, workers and their unions can fight back, defending both the workers whose jobs are deeply affected and the people who rely on public benefits and services. The Federal Unionists Network (FUN), an independent network of local unions representing federal employees, is directly challenging DOGE and its driver, Elon Musk. FUN is running a Save Our Services campaign that centers the impact of the service cuts that Musk and the Trump administration are implementing.41Federal Unionists Network, “Let Us Work! Save Our Services!” accessed April 17, 2025, http://www.federalunionists.net. The network is building scalable infrastructure for education, support, and action.

At the state level, Pennsylvania provides a model for regulating AI in the provision of public services, one that recognizes and protects the key role state workers play in delivering services and benefits. SEIU Local 668, led by President Steve Catanese, successfully negotiated a partnership with Governor Josh Shapiro that establishes a robust co-governance mechanism over AI, including the formation of a worker board to oversee implementation of generative AI tools; a definition of the public worker as a person and of generative AI as a tool; strong human-in-the-loop protections at every step; and monitoring of disparate impacts related to bias and discrimination against protected classes.42Josh Shapiro, “From the Desk of Governor Josh Shapiro,” March 21, 2025, https://www.pa.gov/content/dam/copapwp-pagov/en/governor/documents/2025.3.21%20gov%20shapiro%20letter%20on%20ai%20and%20employees.pdf.

Comprehensive Campaigns

Challenging Big Tech Corporations

Transforming the unprecedented power of the technology sector will require comprehensive campaigns that directly challenge the monopolistic business models and actions of the corporations that dominate and drive the sector and, increasingly, our government. To meet the challenge, these campaigns must include, but not limit themselves to, organizing workers and addressing workplace impacts.

The Athena Coalition, for example, focuses on Amazon’s unaccountable power and its impacts across multiple spheres, pushing for regulatory action to break up the company itself.43Athena, “How We Take on the Tech Oligarchy,” February 28, 2025, https://athenaforall.org/news/boycott-alternatives. Athena members connect how Amazon develops and uses AI and data surveillance technologies in workplaces to what it sells to police departments, ICE,44#NoTechForIce, “Take Back Tech,” accessed April 17, 2025, https://notechforice.com. and authoritarian military states;45No Tech For Apartheid (website), accessed April 17, 2025, https://www.notechforapartheid.com. they raise public awareness of the impacts of pollution on neighborhoods near Amazon logistics hubs,46“Good Jobs. Clean Air. Justice for Neighbors,” WWRC, accessed April 17, 2025, https://warehouseworkers.org/wwrc_campaigns/building-a-better-san-bernardino. pressure the company to reduce the climate impact of its business model, and bring together organizers in states to challenge the energy use and environmental impact of its data centers; and they expose how algorithmic systems affect small businesses on the platform, consumers, and workers. Coalition members are weaving these strands together in order to reclaim our wealth and power from corporations like Amazon.

Building State and Local Power to Advance the Public Interest

Workers and their unions can and must collaborate with racial justice, economic justice, and privacy groups to mount a serious, coordinated defense against the tech sector’s aggressive state policy agenda, which seeks to write the rules of AI and digital workplace technology regulation in the industry’s own interest. Doing so will require deeper engagement with members of unions and community-based organizations to understand and prioritize these interventions. Beyond defense, there is an opportunity to develop a proactive agenda at the state and local level that draws bright lines around the use of surveillance, algorithmic management, and automation technologies in criminal justice, workforce management, and public service delivery, and that also addresses the unsustainable climate impacts of data centers and the industry’s extreme market concentration. California, for example, has a strong set of labor- and public-interest-focused policy proposals that can serve as models for other states. Engagement is also underway with union members and with aligned privacy, tech justice, racial justice, and economic justice organizations and social justice leaders to understand how their core issues are affected by AI, develop strategies to organize and publicly campaign on these issues, and identify where they can best engage on state and local policy. The UC Berkeley Labor Center’s Tech and Work Policy Guide is a key resource that can be used across states,47Mishal Khan et al., “The Current Landscape of Tech and Work Policy: A Guide to Key Concepts,” UC Berkeley Labor Center, November 12, 2024, https://laborcenter.berkeley.edu/tech-and-work-policy-guide. and Local Progress’s recent report, written in collaboration with AI Now, provides an actionable road map for local leaders to protect communities from the harms of AI.48Hillary Ronen, Local Leadership in the Era of Artificial Intelligence and the Tech Oligarchy, Local Progress and AI Now Institute, May 2025, https://localprog.org/43FPlS6.

Organizing Across the AI Value and Supply Chain

A global movement to organize across the AI value and supply chain, from US-based white-collar tech workers to data labelers in the Global South, would provide a powerful check on the technology sector. Such a movement could reorient the trajectory of AI toward the public interest and counter the sector’s authoritarian turn. However, union density in the technology sector, globally and in the US, is negligible, and there have been no serious attempts to organize the sector at scale. Many existing efforts are underresourced; others lack a global orientation and solidarity, limit their focus to economic issues, or do not challenge concentrated market power.

Still, several promising initiatives are gaining traction. These include Amazon Employees for Climate Justice,49Lauren Rosenblatt, “5 Years into Amazon’s Climate Pledge, Workers Challenge its Progress,” Seattle Times, July 10, 2024, https://www.seattletimes.com/business/amazon/5-years-into-amazons-climate-pledge-workers-challenge-its-progress. which is organizing white-collar workers to challenge Amazon on its climate impact; the African Content Moderators Union (ACMU), other locally led associations,50Mohammad Amir Anwar, “Africa’s Data Workers Are Being Exploited by Foreign Tech Firms – 4 Ways to Protect Them,” Conversation, March 31, 2025, https://theconversation.com/africas-data-workers-are-being-exploited-by-foreign-tech-firms-4-ways-to-protect-them-252957. and UNI Global Union,51“African Tech Workers Rising,” UNI Global Union, accessed April 17, 2025, https://uniglobalunion.org/about/sectors/ict-related-services/african-tech-workers-rising. which are organizing data labelers in Africa; the Solidarity Center52Tula Connell, “Mexico: App-Based Drivers Hail New Platform Law,” Solidarity Center, January 6, 2025, https://www.solidaritycenter.org/mexico-app-based-drivers-hail-new-platform-law-2. and ITUC,53Nithin Coca, “Long Silenced, Gig Workers in Indonesia are Organizing and Fighting for their Rights,” Equal Times, March 14, 2025, https://www.ituc-csi.org/long-silenced-gig-workers-in. which support global gig-worker organizing; the Data Workers’ Inquiry,54“Data Workers’ Inquiry,” accessed April 17, 2025, https://data-workers.org/. a community-based research project focusing on data labelers globally; and the TechEquity Collaborative55“Shining a Light on Tech’s Shadow Workforce,” TechEquity Contract Worker Disparity Project, accessed April 17, 2025, https://contractwork.techequitycollaborative.org/. and CWA’s Alphabet Workers Union,56“Dec 19, 2024 – CWA Members at Google Ratify Historic First Contract,” CWA, December 19, 2024, https://cwa-union.org/news/e-newsletter/2024-12-19. which are campaigning on temp- and contract-worker issues.

We desperately need more ambitious and better-resourced projects. There is a particular opportunity for the people who build and train AI systems, and who therefore know them intimately, to use their powerful positions to hold tech firms accountable for how these systems are used. Many people working in these white-collar jobs have said that their values conflict with corporate decision-making.57See, e.g., Laura Newcombe, “After Tesla Employees Signed Letter Asking Elon Musk to Resign, at Least One Has Been Fired,” Gizmodo, May 12, 2025, https://gizmodo.com/after-tesla-employees-signed-letter-asking-elon-musk-to-resign-at-least-one-has-been-fired-2000600934; and Bobby Allyn, “Former Palantir Workers Condemn Company’s Work with Trump Administration,” NPR, May 5, 2025, https://www.npr.org/2025/05/05/nx-s1-5387514/palantir-workers-letter-trump. Organizing and taking collective action from these positions will have a deeply consequential impact on the trajectory of AI.

The Path Forward

During previous periods of extreme inequality, such as the Gilded Age and the Roaring Twenties, muckraking journalism, labor organizing, and strikes reined in unaccountable corporate power and ushered in the Progressive and New Deal eras. In our era, collective labor action will also be critical to challenging not only Big Tech’s unchecked wealth and power over our democracy and society, but also the use of technologies to undermine job quality and reduce workers’ share of the profits they generate. Like the labor movement of the last century, today’s labor movement can fight for and win a new social compact that puts AI and digital technologies in the service of the public interest and holds today’s unaccountable power to account.

Enact a “Zero-Trust” Policy Agenda for AI

In 2023, alongside the Electronic Privacy Information Center (EPIC) and Accountable Tech,58Accountable Tech et al., Zero Trust AI Governance, August 10, 2023, https://ainowinstitute.org/wp-content/uploads/2023/08/Zero-Trust-AI-Governance.pdf. we recommended three pillars to guide policy action in the AI market:

- Time is of the essence—start by vigorously enforcing existing laws.

- Bold, easily administrable, bright-line rules are necessary.

- At each phase of the AI system life cycle, the burden should be on companies, including end users, to prove that their systems work as claimed and are not harmful.

“Given the monumental stakes, blind trust in [tech firms’] benevolence is not an option,” we argued then. That warning rings even truer now.

1. Build a drumbeat of bright-line rules that restrict the most harmful AI uses wholesale, or limit firms’ ability to collect and use data in specific ways.

Bold, bright-line rules that restrict harmful AI applications or types of data collection for AI send a clear message that the public determines whether, in what contexts, and how AI systems will be used. Frameworks that rely on process-based safeguards (such as AI auditing or risk-assessment regimes) have often, in practice, tended to further empower industry leaders, and they depend on robust regulatory capacity for effective enforcement.59AI Now Institute, “Algorithmic Accountability: Moving Beyond Audits,” in 2023 Landscape: Confronting Tech Power, AI Now Institute, https://ainowinstitute.org/publications/algorithmic-accountability. Bright-line rules, by contrast, are easily administrable and target the kinds of harms that can’t be prevented or remedied through safeguards alone. There’s a growing list of ripe targets for these kinds of clear prohibitions. For example:60A range of bills of this variety have appeared across state legislatures. See Public Citizen, “Tracker: State Legislation on Intimate Deepfakes,” last updated April 15, 2025, https://www.citizen.org/article/tracker-intimate-deepfakes-state-legislation; Public Citizen, “Tracker: State Legislation on Deepfakes in Elections,” last updated April 17, 2025, https://www.citizen.org/article/tracker-legislation-on-deepfakes-in-elections; California Education Code § 87359.2 (2024); and H.B. 1709, 89th Leg. (Tex. 2025).

- AI cannot be used for emotion-detection systems

- AI cannot be used for “social scoring,” i.e., scoring or ranking people based on their social behavior or predicted characteristics

- Surveillance data cannot be used to set prices or wages

- AI cannot be used to deny health insurance claims

- Surveillance data about workers cannot be sold to third-party vendors

- AI cannot be used to replace public school teachers

- AI cannot be used to generate sexually explicit deepfake imagery or election-related deepfake imagery

2. Regulate throughout the AI life cycle.

This means no broad-based exemptions for foundation-model providers, application developers, or end users.

AI must be regulated throughout the entire life cycle of development: from data collection and training, through fine-tuning and application development, to deployment. The appropriate actors should be held responsible for decisions made at each of these stages, and required to provide the transparency and disclosures that enable actors further downstream to uphold their own obligations.61Cybersecurity & Infrastructure Security Agency, “CISA and UK NCSC Unveil Joint Guidelines for Secure AI System Development,” November 26, 2023, https://www.cisa.gov/news-events/alerts/2023/11/26/cisa-and-uk-ncsc-unveil-joint-guidelines-secure-ai-system-development.

Figure 1: Risk origination and risk proliferation in the foundation models supply chain. Source: Ada Lovelace Institute, 2023.

Transparency regimes are the foundation of effective technology regulation: necessary, but never sufficient.62Chloe Xiang, “OpenAI’s GPT-4 Is Closed Source and Shrouded in Secrecy,” Vice, March 16, 2023, https://www.vice.com/en/article/ak3w5a/openais-gpt-4-is-closed-source-and-shrouded-in-secrecy. Yet in the past few years, the AI industry has mounted fierce and largely successful resistance to baseline disclosure requirements. That’s true at the general-purpose stage, where industry has used copyright and commercial secrecy as a shield to avoid disclosing training data,63Kyle Wiggers, “Many companies won’t say if they’ll comply with California’s AI training transparency law,” TechCrunch, October 4, 2024, https://techcrunch.com/2024/10/04/many-companies-wont-say-if-theyll-comply-with-californias-ai-training-transparency-law. but it’s equally true at the final stage, where these systems are deployed. Proactive transparency and disclosure requirements (where the consumer doesn’t need to individually request this information)64Matt Scherer, “FAQ on Colorado’s Consumer Artificial Intelligence Act (SB 24-205),” Center for Democracy and Technology, December 17, 2024, https://cdt.org/insights/faq-on-colorados-consumer-artificial-intelligence-act-sb-24-205/#h-the-law-requires-companies-to-give-some-information-to-consumers-proactively-without-the-consumer-having-to-request-it-why-is-that-proactive-disclosure-necessary. have prompted intense backlash from industry, most recently in the form of claims that the disclosure provisions in Colorado’s AI law, passed in 2024, place an “undue burden” on businesses.65Tamara Chuang, “Attempt to Tweak Colorado’s Controversial, First-in-the-Nation Artificial Intelligence Law is Killed,” Colorado Sun, May 5, 2025, https://coloradosun.com/2025/05/05/colorado-artificial-intelligence-law-killed. As a result, in many contexts where AI is being used, the people an AI system affects will never be made aware of its role in determinations that can have significant consequences for their lives, let alone be given the ability to contest the system.

Industry has also mounted a concerted lobbying effort to put all of the onus on downstream developers while exempting the developers of so-called “general purpose” systems.66Matt Perault, “Regulate AI Use, Not AI Development,” Andreessen Horowitz, January 27, 2025, https://a16z.com/regulate-ai-use-not-ai-development; Jacob Helberg, “11 Elements of American AI Dominance,” Republic, July 26, 2024, https://therepublicjournal.com/journal/11-elements-of-american-ai-supremacy. In 2023, alongside more than fifty global AI experts, AI Now published a letter urging the European Commission to reject this approach in the context of the EU AI Act.67AI Now Institute, “General Purpose AI Poses Serious Risks, Should Not Be Excluded From the EU’s AI Act,” policy brief, April 13, 2023, https://ainowinstitute.org/publications/gpai-is-high-risk-should-not-be-excluded-from-eu-ai-act. It’s important that AI be evaluated at every stage of the life cycle. Certain aspects of AI models will be best evaluated at the application stage: This is where pre-deployment testing and validation will give the most insight into the efficacy and safety of particular uses of AI.68Anna Lenhart and Sarah Myers West, Lessons from the FDA for AI, AI Now Institute, August 1, 2024, https://ainowinstitute.org/publications/research/lessons-from-the-fda-for-ai.
But the developers of foundation models and those involved in constructing datasets should not be exempt from scrutiny, particularly since they are generally the largest and best-resourced actors in the market,69Amba Kak and Sarah Myers West, “General Purpose AI Poses Serious Risks, Should Not Be Excluded from the EU’s AI Act,” policy report, AI Now Institute, April 13, 2023, https://ainowinstitute.org/publication/gpai-is-high-risk-should-not-be-excluded-from-eu-ai-act. and have shown a growing propensity for opacity, offering fewer and fewer details about how they train their systems.70See Margaret Mitchell et al., “Model Cards for Model Reporting,” FACCT, January 29, 2019, https://doi.org/10.1145/3287560.3287596; Jessica Ji, “What Does AI Red-Teaming Actually Mean?” Center for Security and Emerging Technology (CSET), October 24, 2023, https://cset.georgetown.edu/article/what-does-ai-red-teaming-actually-mean; and Heidy Khlaaf and Sarah Myers West, “Safety Co-Option and Compromised National Security: The Self-Fulfilling Prophecy of Weakened AI Risk Thresholds,” April 21, 2025, https://arxiv.org/abs/2504.15088. Introducing stronger accountability requirements at the foundation-model layer would push AI developers to restructure their development practices, and potentially their operating models. These changes would in themselves create greater internal transparency and accountability within AI firms, conferring societal benefits and aiding the work of enforcement agencies when they need to investigate AI companies. Regulatory structures that place the onus solely on the application stage ignore that application developers, as well as purchasers and deployers, may lack the information necessary to ensure compliance with existing law absent upstream intervention.71Lenhart and West, Lessons from the FDA for AI. And the end users of AI systems are in many instances not consumers; they’re businesses or public agencies, themselves in need of scrutiny for the way their use of these systems affects consumers, workers, and members of the public.

- Require all foundation-model developers to publish detailed information on their websites about the data used to train their systems.

- At each stage of the supply chain, require developers to document and make available their risk-mitigation techniques, as well as mandate disclosure of any areas of foreseen risk they are not positioned to mitigate, so that this is transparent to other actors along the supply chain.

- Institute frameworks for AI use that protect the people on whom AI is used and introduce healthy friction and democratic decision-making into AI deployment, through measures such as a “right to override” decisions and requirements that councils composed of those affected by a system have a say in whether it is used at all.

- In safety-critical contexts (e.g., energy-grid allocation, military use, nuclear facilities), prohibit the use of foundation models until they have been shown to pass traditional systems safety engineering tests, and require systems to be built safe by design.

- Require pre-deployment testing and validation of AI applications to examine the safety and efficacy of particular uses.

3. The AI industry shouldn’t be grading its own homework: Oversight of AI model development must be independent.

Industry tends to rely too heavily on “red-teaming,” “model cards,” and similar approaches to system evaluation, in which companies are the primary actors responsible for determining whether their systems work as intended and for mitigating harm.72OpenAI, “Advancing Red Teaming With People and AI,” November 21, 2024, https://openai.com/index/advancing-red-teaming-with-people-and-ai; Microsoft AI Red Team, Lessons from Red Teaming 100 Generative AI Products, Microsoft, 2024, https://ashy-coast-00aeb501e.6.azurestaticapps.net/MS_AIRT_Lessons_eBook.pdf; Rebecca Pifer, “CHAI Launches Registry for Health AI Model Cards,” Healthcare Dive, February 28, 2025, https://www.healthcaredive.com/news/coalition-health-ai-model-card-registry/741037. This approach has many problems: it ignores, for example, the significant conflicts of interest involved, and it fails to employ robust methodologies.73Khlaaf and West, “Safety Co-Option and Compromised National Security.”

Companies have asserted that they alone hold the technical expertise necessary to evaluate their own systems,74“Fmr. Google CEO Says No One in Government Can Get AI Regulation ‘Right’,” NBC News, May 15, 2023, https://www.nbcnews.com/meet-the-press/video/fmr-google-ceo-says-no-one-in-government-can-get-ai-regulation-right-174442053869. using that claim to justify what is essentially a fully self-regulatory approach to governance: a set of “voluntary commitments” made to the Biden administration in 2023.75White House, “FACT SHEET: Biden-Harris Administration Secures Voluntary Commitments from Eight Additional Artificial Intelligence Companies to Manage the Risks Posed by AI,” September 12, 2023, https://bidenwhitehouse.archives.gov/briefing-room/statements-releases/2023/09/12/fact-sheet-biden-harris-administration-secures-voluntary-commitments-from-eight-additional-artificial-intelligence-companies-to-manage-the-risks-posed-by-ai. When the companies were asked a year later about their progress on those commitments, their responses were milquetoast: While they reported taking actions like internal testing to mitigate cybersecurity risks, they were unable to validate whether any of those actions actually reduced underlying risk; and by focusing on domains like bioweapons, they ignored the wider universe of harms, such as consumer and worker exploitation, privacy, and copyright.76Melissa Heikkilä, “AI Companies Promised to Self-Regulate One Year Ago. What’s Changed?” MIT Technology Review, July 22, 2024, https://www.technologyreview.com/2024/07/22/1095193/ai-companies-promised-the-white-house-to-self-regulate-one-year-ago-whats-changed. OpenAI, for its part, stepped back from even the basic obligations of these commitments when it released GPT-4.1, opting not to publish a model card on the grounds that the model “isn’t at the frontier.”77Maxwell Zeff, “OpenAI Ships GPT-4.1 Without a Safety Report,” TechCrunch, April 15, 2025, https://techcrunch.com/2025/04/15/openai-ships-gpt-4-1-without-a-safety-report.

This illustrates a point that by now should be abundantly clear: Companies cannot be responsible for evaluating themselves, and they are poorly incentivized to uphold any promises they make absent the foundation of enforceable law:

- Provide enforcement agencies with the resources and in-house staffing necessary to conduct oversight throughout the AI life cycle and to hold firms accountable for any violations of law. Every agency that enforces the law against companies that use AI should be able to detect, investigate, and remediate violations of law involving those technologies. As of January 2025, only the Federal Trade Commission and the Consumer Financial Protection Bureau were known to have this capability, and their teams have since been significantly reduced.78See Lauren Feiner, “The Technology Team at Financial Regulator CFPB Has Been Gutted,” Verge, February 14, 2025, https://www.theverge.com/policy/612933/cfpb-tech-team-gutted-trump-doge-elon-musk; and Lauren Feiner and Alex Heath, “DOGE Has Arrived at the FTC,” Verge, April 4, 2025, https://www.theverge.com/news/643674/doge-members-spotted-ftc-elon-musk.

- Require AI companies to submit to independent third-party oversight and testing throughout the AI life cycle, and to make available the information auditing bodies need to evaluate their models or products.79Laura Weidinger et al., “Toward an Evaluation Science for Generative AI Systems,” arXiv, updated March 13, 2025, https://arxiv.org/abs/2503.05336.

4. Create remedies that strike at the root of AI power: data.

We don’t need to limit ourselves to the standard AI policy tool kit: A wide range of creative remedies could be deployed by enforcement agencies to strike at the root of AI firms’ power. These include measures such as:

- Require firms to delete ill-gotten data that they have used to train AI models, along with any related work product generated with that data. Algorithmic deletion remedies require firms that have benefited from using ill-gotten data to train AI models to delete not only the data but also the models trained on it.80Jevan Hutson and Ben Winters, “America’s Next ‘Stop Model!’: Model Deletion,” Georgia Law Technology Review, September 20, 2022, https://doi.org/10.2139/ssrn.4225003; Tiffany C. Li, “Algorithmic Destruction,” Southern Methodist University Law Review 75, no. 479 (2022), https://doi.org/10.25172/smulr.75.3.2; Cristina Caffarra and Robin Berjon, “‘Google is a Monopolist’—Wrong and Right Ways to Think About Remedies,” Tech Policy Press, August 9, 2024, https://www.techpolicy.press/google-is-a-monopolist-wrong-and-right-ways-to-think-about-remedies. This remedy was developed during the first Trump administration and has since been used in a number of cases, including the FTC’s Everalbum and Kurbo cases.

- Curb secondary use of data collected for one purpose and used for another. AI firms frequently retain data collected under one justification indefinitely in order to train subsequent models. Curbing such secondary uses would both protect the underlying consent regime (ensuring that when users consent to the collection of their data for a particular use, that consent is not treated as an expansive mandate for other uses) and limit the continual proliferation of data available for model training. The FTC’s Amazon Alexa case offers a useful example: the FTC alleged that Amazon violated COPPA by retaining children’s voiceprints for the purpose of improving its AI models.81United States v. Amazon.com, No. 2:23-cv-00811-TL (W.D. Wash. July 21, 2023); see also Rohit Chopra, “Prepared Remarks of CFPB Director Rohit Chopra at the Federal Reserve Bank of Philadelphia on the Personal Financial Data Rights Rule,” Consumer Financial Protection Bureau, October 22, 2024, (<)a href='https://www.consumerfinance.gov/about-us/newsroom/prepared-remarks-of-cfpb-director-rohit-chopra-at-the-federal-reserve-bank-of-philadelphia-on-the-personal-financial-data-rights-rule/'(>)https://www.consumerfinance.gov/about-us/newsroom/prepared-remarks-of-cfpb-director-rohit-chopra-at-the-federal-reserve-bank-of-philadelphia-on-the-personal-financial-data-rights-rule(<)/a(>).

- Limit hyperscaler partnerships with AI startups. As we explore in Chapter 2, one decisive way Big Tech companies gain advantage in the AI market is by taking control of competing AI companies through partnership terms, investments, or acquisitions. Banning hyperscalers from investing in or partnering with competing AI companies is one bold, structural measure that would help dismantle hyperscalers’ stronghold on the AI stack. This remedy was initially proposed by the Department of Justice in its case against Google’s search monopoly.82Plaintiffs’ Initial Proposed Final Judgment, United States v. Google, No. 1:20-cv-03010-APM (D.D.C. August 5, 2024), and Colorado v. Google, Case No. 20-cv-3715-APM (D.D.C. August 5, 2024). While the proposal was later revised, the remedy’s initial inclusion signals the kind of bold action required to shape a truly competitive AI market.

- Ban repeat offenders from selling AI to the government. Protections are in place to ensure that those selling services and products to the federal government are not engaging in fraud, waste, abuse, or antitrust violations. To give firms an incentive to follow the law, AI companies and salespeople who meet the grounds for debarment should be “debarred” from winning federal contracts.83US General Services Administration, “Frequently Asked Questions: Suspension & Debarment,” accessed May 5, 2025, (<)a href='https://www.gsa.gov/policy-regulations/policy/acquisition-policy/office-of-acquisition-policy/gsa-acq-policy-integrity-workforce/suspension-debarment-and-agency-protests/suspension-debarment-faq'(>)https://www.gsa.gov/policy-regulations/policy/acquisition-policy/office-of-acquisition-policy/gsa-acq-policy-integrity-workforce/suspension-debarment-and-agency-protests/suspension-debarment-faq(<)/a(>).

5. We need a sharp-edged competition tool kit to de-rig the AI market and to address how AI shapes market power across sectors.

As concerns about Big Tech dominance in a frenzied AI market have grown more prominent, competition enforcers around the world have been far from silent. Over the past few years, we have seen multiple early inquiries into so-called partnership agreements between AI startups and Big Tech hyperscalers;84Federal Trade Commission, (<)em(>)Partnerships Between Cloud Service Providers and AI Developers(<)/em(>), staff report, January 2025, (<)a href='https://www.ftc.gov/system/files/ftc_gov/pdf/p246201_aipartnerships6breport_redacted_0.pdf'(>)https://www.ftc.gov/system/files/ftc_gov/pdf/p246201_aipartnerships6breport_redacted_0.pdf(<)/a(>); European Commission, “Commission Launches Call for Contributions on Competition in Virtual Worlds and Generative AI,” press release, January 8, 2024, (<)a href='https://ec.europa.eu/commission/presscorner/detail/en/ip_24_85'(>)https://ec.europa.eu/commission/presscorner/detail/en/ip_24_85(<)/a(>); Competition and Markets Authority, “Microsoft / OpenAI Partnership Merger Inquiry,” Gov.UK, April 15, 2025, (<)a href='https://www.gov.uk/cma-cases/microsoft-slash-openai-partnership-merger-inquiry'(>)https://www.gov.uk/cma-cases/microsoft-slash-openai-partnership-merger-inquiry(<)/a(>). several market studies into concentration in the cloud market, from Japan to the Netherlands to the UK to the US;85Japan Fair Trade Commission, “Report Regarding Cloud Services,” press release, June 28, 2022, (<)a href='https://www.jftc.go.jp/en/pressreleases/yearly-2022/June/220628.html'(>)https://www.jftc.go.jp/en/pressreleases/yearly-2022/June/220628.html(<)/a(>); Authority for Consumers and Markets, “Market Study into Cloud Services,” May 9, 2022, (<)a href='https://www.acm.nl/en/publications/market-study-cloud-services'(>)https://www.acm.nl/en/publications/market-study-cloud-services(<)/a(>); Competition and Markets Authority, (<)em(>)Cloud Services Market Investigation(<)/em(>), January 28, 2025, (<)a href='https://assets.publishing.service.gov.uk/media/67989251419bdbc8514fdee4/summary_of_provisional_decision.pdf'(>)https://assets.publishing.service.gov.uk/media/67989251419bdbc8514fdee4/summary_of_provisional_decision.pdf(<)/a(>); Nick Jones, “Cloud Computing RFI: What We Heard and Learned,” FTC (blog), November 16, 2023, (<)a href='https://www.ftc.gov/policy/advocacy-research/tech-at-ftc/2023/11/cloud-computing-rfi-what-we-heard-learned'(>)https://www.ftc.gov/policy/advocacy-research/tech-at-ftc/2023/11/cloud-computing-rfi-what-we-heard-learned(<)/a(>). and detailed reports highlighting potential choke points and competition risks across the AI supply chain.86Competition and Markets Authority, (<)em(>)AI Foundation Models Update Paper(<)/em(>), April 11, 2024, (<)a href='https://assets.publishing.service.gov.uk/media/661941a6c1d297c6ad1dfeed/Update_Paper__1_.pdf'(>)https://assets.publishing.service.gov.uk/media/661941a6c1d297c6ad1dfeed/Update_Paper__1_.pdf(<)/a(>); Office of Technology, “Tick, Tick, Tick. Office of Technology’s Summit on AI,” FTC, January 18, 2024, (<)a href='https://www.ftc.gov/policy/advocacy-research/tech-at-ftc/2024/01/tick-tick-tick-office-technologys-summit-ai'(>)https://www.ftc.gov/policy/advocacy-research/tech-at-ftc/2024/01/tick-tick-tick-office-technologys-summit-ai(<)/a(>). The DOJ, FTC, CMA, and EC even issued a joint statement on competition concerns in AI, warning that partnerships and investments could be used to undermine competition.87Federal Trade Commission, “FTC, DOJ, and International Enforcers Issue Joint Statement on AI Competition Issues,” press release, July 23, 2024, (<)a href='https://www.ftc.gov/news-events/news/press-releases/2024/07/ftc-doj-international-enforcers-issue-joint-statement-ai-competition-issues'(>)https://www.ftc.gov/news-events/news/press-releases/2024/07/ftc-doj-international-enforcers-issue-joint-statement-ai-competition-issues(<)/a(>). This is already a promising departure from the passive stance toward the consumer tech industry that marked the past decade, which has left regulators struggling to catch up and remedy harms, from Facebook’s acquisitions of WhatsApp and Instagram88Laya Neelakandan, “Meta Resorted to ‘Buy-or-Bury Scheme’ With Instagram and WhatsApp Deals, Former FTC Chair Lina Khan Says,” CNBC, April 14, 2025, (<)a href='https://www.cnbc.com/2025/04/14/former-ftc-chair-khan-meta-acquisitions-instagram-whatsapp.html'(>)https://www.cnbc.com/2025/04/14/former-ftc-chair-khan-meta-acquisitions-instagram-whatsapp.html(<)/a(>). to Google’s sprawling search monopoly.89Michael Liedtke, “Google’s Digital Ad Network Declared an Illegal Monopoly, Joining Its Search Engine in Penalty Box,” Associated Press, April 17, 2025, (<)a href='https://apnews.com/article/google-illegal-monopoly-advertising-search-a1e4446c4870903ed05c03a2a03b581e'(>)https://apnews.com/article/google-illegal-monopoly-advertising-search-a1e4446c4870903ed05c03a2a03b581e(<)/a(>).

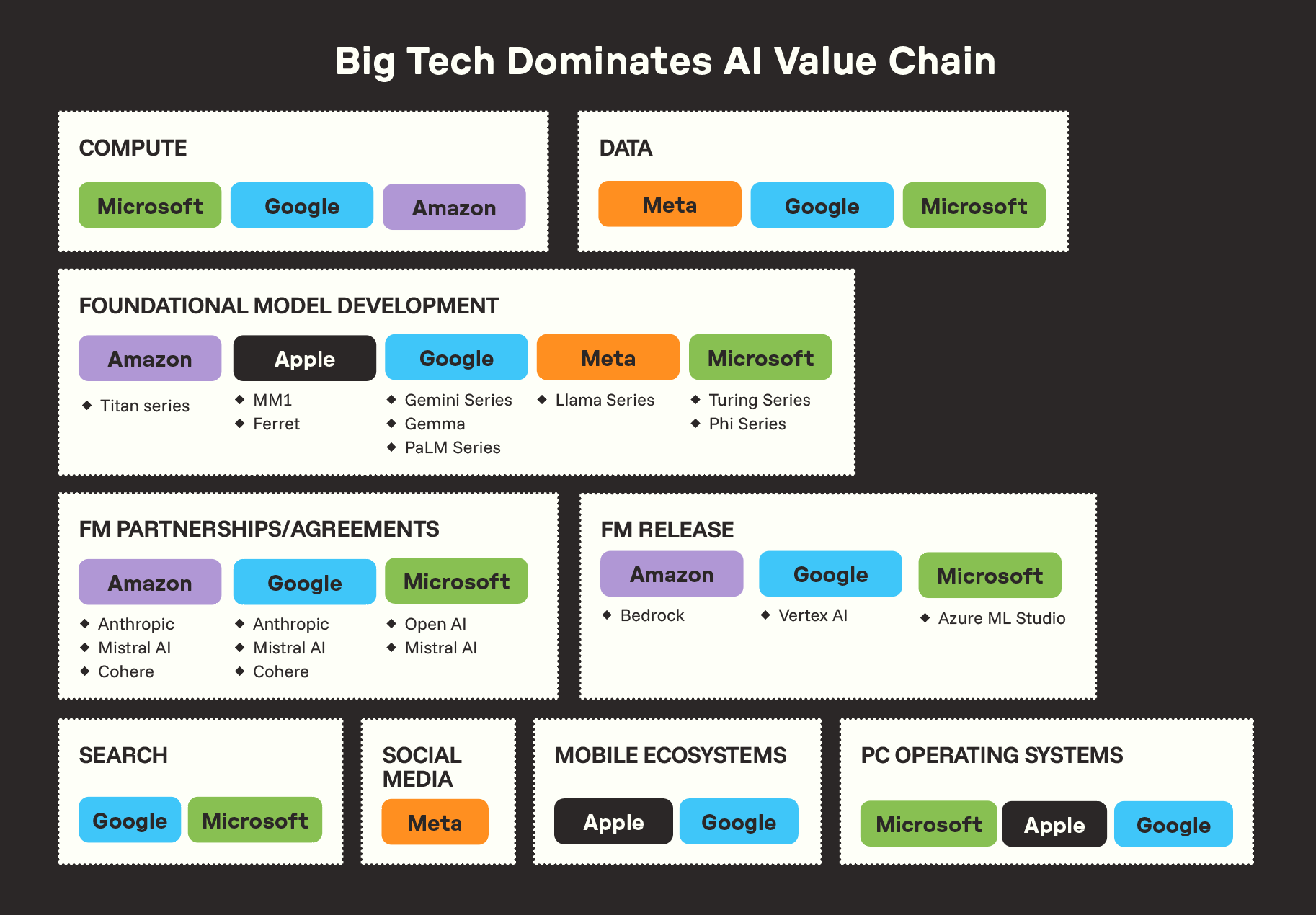

Yet despite all the “right noises,” much of this activity has failed to translate into concrete enforcement action or legislative change, or to draw bright lines prohibiting specific anticompetitive business practices. A familiar pattern has been narrow, restrictive interpretations of existing legal standards for enforcement action. For example, traditional merger review criteria cannot capture more indirect forms of dependence and control between AI startups and cloud hyperscalers. Or consider the filing threshold in the US Hart-Scott-Rodino Act, under which smaller acquisitions by Big Tech firms, such as Rockset (acquired by OpenAI), Deci AI (acquired by NVIDIA), and OctoAI (also acquired by NVIDIA), were not subject to scrutiny. Underlying all of these issues is a more fundamental limitation: the artificial blinkers that prevent regulators from fully grappling with the many dimensions of power and control that Big Tech firms exercise through their sprawling ecosystems. A recent report from the UK’s Competition and Markets Authority (CMA) included a diagram (see Fig 2) depicting the presence of Amazon, Apple, Google, Meta, and Microsoft across the AI supply chain, illuminating how their presence across these markets enables network effects, data feedback loops, and unparalleled access to monetizable markets for their AI products.90Competition and Markets Authority, (<)em(>)AI Foundation Models Technical Update Report(<)/em(>), April 16, 2024, (<)a href='https://assets.publishing.service.gov.uk/media/661e5a4c7469198185bd3d62/AI_Foundation_Models_technical_update_report.pdf'(>)https://assets.publishing.service.gov.uk/media/661e5a4c7469198185bd3d62/AI_Foundation_Models_technical_update_report.pdf(<)/a(>). (We canvass these in Chapter 2: Heads I Win, Tails You Lose.)

Fig 2: How Amazon, Apple, Google, Meta, and Microsoft permeate the AI supply chain. Source: CMA; Graphic Design: Mozilla Foundation.

The irony is that the CMA, like many of its counterparts, has fallen short of acting in ways that might interrupt this ecosystem control. The scope for intervention is limited as much by restrictive legal thresholds as by a corporate lobbying environment around AI that casts any market intervention not only as anti-innovation (see Chapter 1.4: Recasting Regulation) but as fundamentally harmful to the national interest, given the stakes of the so-called AI arms race (see Chapter 1.3: AI Arms Race 2.0). Staying with the UK example, Microsoft announced a massive investment in compute on the heels of two significant regulatory decisions affecting the company: the CMA’s decision not to pursue a block of the Microsoft-Activision merger, and the CMA’s opening of an investigation into competition concerns in the cloud market. AI firms use such investments as a deliberate hedge against the very real risk that the CMA will act in ways that threaten their bottom line.

Looking ahead, we see an opportunity to rally around a set of targeted interventions that could tackle Big Tech’s control over the broader tech ecosystem and go a long way toward shifting the “rigged” dynamics of the AI market (see Chapter 2: Heads I Win, Tails You Lose). Here we spotlight key policy directions that target high-value intervention points.91States might be ideal testing grounds here, especially as many of these moves might require legislative changes. A recent report by the Institute for Local Self-Reliance identifies state antitrust laws as ripe for enforcement and legislative reform, pointing to enforcement victories in Washington, Colorado, Arizona, and New York, among other states, as well as successful efforts to pass targeted legislative reforms that give these enforcers better tools to grapple with anticompetitive conduct. See Ron Knox, (<)em(>)The State(s) of Antitrust: How States Can Strengthen Monopoly Laws and Get Enforcement Back on Track(<)/em(>), Institute for Local Self-Reliance, April 2025, (<)a href='https://ilsr.org/wp-content/uploads/2025/03/ILSR-StatesofAntitrust.pdf'(>)https://ilsr.org/wp-content/uploads/2025/03/ILSR-StatesofAntitrust.pdf(<)/a(>); and Knox, “Mapping Our State Antitrust Laws,” Institute for Local Self-Reliance, April 1, 2025, (<)a href='https://ilsr.org/articles/state-antitrust-law-database-and-map/'(>)https://ilsr.org/articles/state-antitrust-law-database-and-map(<)/a(>).

- Structural separation to prevent vertical integration across the AI supply chain, especially targeting infrastructure- and model-layer advantages, including the following:

- Prevent cloud companies from participating in the market for AI foundation models.

- Require Big Tech firms to divest their cloud computing businesses from the rest of their corporate structures, so that those businesses operate as wholly independent entities.

- Prevent AI foundation model companies from participating in other markets related to AI.

- Prevent chip designers from investing in AI development companies.