
Situating the Current Political Moment

The European Union stands on the brink of change. Its economic outlook remains uncertain, exacerbated by a cost-of-living crisis,1European Parliament, “Europeans Concerned by Cost of Living Crisis and Expect Additional EU Measures,” press release, January 12, 2023, (<)a href='https://www.europarl.europa.eu/news/en/press-room/20230109IPR65918/europeans-concerned-by-cost-of-living-crisis-and-expect-additional-eu-measures'(>)https://www.europarl.europa.eu/news/en/press-room/20230109IPR65918/europeans-concerned-by-cost-of-living-crisis-and-expect-additional-eu-measures(<)/a(>). growing geopolitical tensions, the aftershocks of COVID-19 supply shortages, and an energy crisis caused by Russia’s ongoing war against Ukraine. Despite numerous lawsuits, cases, and major court rulings against Big Tech, Europe has failed to meaningfully intervene in the lopsided and heavily concentrated global tech sector. Meanwhile, a rise of far-right political parties in key Member States challenges some of the core principles of the European project: the idea of an “ever closer union,” democratic norms, fundamental rights, and the rule of law. Against this backdrop, the pressure is on for the new European Commission to deliver—not just on economic growth and prosperity, but on securing a more independent and competitive place in the world that could make the EU more resilient against future shocks.

Combined, these challenges have ignited a historic rethinking of the core tenets of EU economic policy, and a return to a more active statecraft in the form of industrial policy.2For why this is historically significant, see Max von Thun, “To Innovate or to Regulate? The False Dichotomy at the Heart of Europe’s Industrial Approach,” (<)em(>)AI Nationalism(s)(<)/em(>), AI Now Institute, March 12, 2024, (<)a href='https://ainowinstitute.org/publication/to-innovate-or-to-regulate-the-false-dichotomy'(>)https://ainowinstitute.org/publication/to-innovate-or-to-regulate-the-false-dichotomy(<)/a(>). In September 2024, Mario Draghi, the former prime minister of Italy and former president of the European Central Bank, published his anticipated report on the competitiveness and future of the European Union. The report, in line with one published in April by another former Italian prime minister, Enrico Letta, calls for a transformative change in European policy to address the relative decline of the European Union, proposing an €800 billion increase in annual public and private investment, as well as reforms in trade, internal market, and regulatory harmonization, among other measures. To be sure, traditional regulatory levers are a part of this renovation, too: both Draghi’s report and Commission President Ursula von der Leyen’s political roadmap call for “a new approach to competition policy” that enables European companies to scale up and consolidate,3Max von Thun, “Europe Must Not Tie Its Hands in the Fight Against Corporate Power,” (<)em(>)Financial Times(<)/em(>), September 19, 2024, (<)a href='https://www.ft.com/content/cc4d2249-55af-4763-af7b-5a31cf254e2d'(>)https://www.ft.com/content/cc4d2249-55af-4763-af7b-5a31cf254e2d(<)/a(>). while also considering resilience and ensuring a level playing field. 
These calls have created a vibrant policy opening, in which interest groups are positioning themselves to shape this new industrial policy within existing political and institutional constraints.

AI & Industrial Policy in Europe

As we argue throughout this report, in the absence of a clear public interest vision for Europe’s (technological) future, three scenarios become more likely: public money will be spent in contradictory, insufficient, or incoherent ways; public money will primarily benefit incumbent digital giants who already dominate the AI ecosystem; or (as Seda Gürses and Sarah Chander argue in Chapter V) public budgets will shift toward market-driven, surveillant, punitive, or extractive technologies that are framed as solutions for complex problems.

It is within this environment of anxieties around Europe’s decline and pathways to its resurrection that AI has again risen to the front stage of policy. Europe’s position as a laggard in the AI “race” has become a symbol of the continent’s real and perceived lack of competitiveness and digital sovereignty, especially compared to the US and China. At the same time, AI’s potential is framed as central to addressing a whole range of complex problems the continent is facing: the climate crisis, slowing economic growth, and deteriorating public services.4Although the EU’s nascent industrial policy encompasses far more than just AI and digital transformation, artificial intelligence occupies a central place in the narrative about Europe’s decline and possible resurrection.

There is historical precedent for anxieties around national competitiveness encouraging more active industrial policies in Europe. As early as the 1970s and 1980s, concerns over the decline in competitiveness against Japan and the United States led to familiar calls for increased investment in high-tech industries.5Filippo Bontadini et al., (<)em(>)EU Industrial Policy Report 2024(<)/em(>), Luiss Institute for European Analysis and Policy, September 2024, (<)a href='https://leap.luiss.it/luhnip-eu-industrial-policy-report-2024/#:~:text=The%20EU%20Industrial%20Policy%20Report,economic%20transformations%2C%20and%20environmental%20imperatives.'(>)https://leap.luiss.it/luhnip-eu-industrial-policy-report-2024(<)/a(>). In 1993, Jacques Delors ascribed Europe’s dire economic situation to a lack of investment in high-tech industries in the face of a changing world.6Paul Krugman, “Competitiveness: A Dangerous Obsession,” (<)em(>)Foreign Affairs(<)/em(>), March 1, 1994, (<)a href='https://www.foreignaffairs.com/articles/1994-03-01/competitiveness-dangerous-obsession'(>)https://www.foreignaffairs.com/articles/1994-03-01/competitiveness-dangerous-obsession(<)/a(>). Today, artificial intelligence forms a specific part of this competitiveness frame. In Draghi’s narrative, the lack of EU hyperscalers and the dominance of US firms in foundation model development are emblematic of Europe’s deficiencies and a continuation of previous failures to capitalize on technological waves. In this account, the gap not only testifies to a lack of digital competitiveness and technological sovereignty but also risks leaving Europe even further behind in global value chains. EU policymakers increasingly assume that investing in AI and integrating it into traditional industries is essential for generating economic growth and enhancing productivity across the EU.

Although it is still early days for industrial policy in this sector, initiatives are in motion that make for good case studies to tease out the early contours of the vision. Initiatives such as the European Commission’s €3 billion innovation package for AI startups and SMEs, the European Chips Act, and the investment and regulatory recommendations outlined in the Draghi report highlight the EU’s focus on fostering AI adoption across key industrial sectors and public services, while nurturing and growing regional and national AI economies—and the industries that underpin them. In practice, this includes plans to unlock new financing from private and public sources, build and coordinate European AI capacities (diffusing them across selected industries), cut down regulatory barriers, and harmonize the European market for AI.

While we’re still some way from a clear and coherent vision animating these public investments in AI, a few features are already coming to the fore. For one, AI—particularly large-scale AI—is viewed as a technology where EU companies still have a fighting chance at leadership, and efforts seem to be directed at identifying these winning niches. There are stray references to broader social and environmental goals—like the Draghi report positioning AI as key to defending the EU’s social model and enabling the green transition—but, as we explore in this collection, these claims are largely asserted and the assumptions don’t stand up to scrutiny.

Status Quo: Where Does Europe Stand on AI?

Despite Europe’s lack of the homegrown digital platforms that marked the latest phase of the digital economy, European AI startups are still often seen in policy debates as potentially on a path to catching up with their US competitors, owing to the EU’s long tradition of academic research and expertise. However, the public attention garnered by exemplars like France’s Mistral AI and Germany’s Aleph Alpha, as well as dreams of building a European large-scale AI, obscures how intertwined the European AI market is with the global AI stack.

The large-scale AI market as we know it today is characterized by both horizontal and vertical concentration of power. Incumbent digital firms shape key inputs to large-scale AI: computing, data, capital, and talent; as well as distribution networks to access customers, risking further entrenchment or expansion of their market power.7Federal Trade Commission, “FTC, DOJ, and International Enforcers Issue Joint Statement on AI Competition Issues,” press release, July 23, 2024, (<)a href='https://www.ftc.gov/news-events/news/press-releases/2024/07/ftc-doj-international-enforcers-issue-joint-statement-ai-competition-issues'(>)https://www.ftc.gov/news-events/news/press-releases/2024/07/ftc-doj-international-enforcers-issue-joint-statement-ai-competition-issues(<)/a(>). As a result, most leading AI startups, such as Anthropic and OpenAI, have entered into lopsided partnerships with tech giants, trading financial and compute capacity for access to their models. Potential competitors in the downstream AI market, such as Adept, Character.ai, or Inflection AI have recently been de facto acquired by the large hyperscalers with mergers and agreements that effectively sidestep merger regulations.8Alex Heath, “This is Big Tech’s Playbook for Swallowing the AI Industry,” (<)em(>)Verge(<)/em(>), July 1, 2024, (<)a href='https://www.theverge.com/2024/7/1/24190060/amazon-adept-ai-acquisition-playbook-microsoft-inflection'(>)https://www.theverge.com/2024/7/1/24190060/amazon-adept-ai-acquisition-playbook-microsoft-inflection(<)/a(>). 
And while global competition authorities have raised concerns around the negative impacts of these business arrangements, they’ve stopped short of issuing remedies that would truly curtail their power or restructure the market toward a more level playing field.9Competition authorities such as the EU Directorate-General of Competition (DG COMP), the UK’s Competition and Markets Authority (CMA), and the Federal Trade Commission (FTC) have argued that such strategic investments and partnerships can undermine competition and manipulate market outcomes to their advantage. See Federal Trade Commission, “FTC, DOJ, and International Enforcers Issue Joint Statement on AI Competition Issues.”

Large US incumbents are uniquely positioned to shape the direction of downstream large-scale AI innovation. In the US, for instance, corporate giants Microsoft, Google, and Amazon vastly outspent traditional Silicon Valley investors in deals with AI startups in 2023.10(<)a href='https://www.ft.com/george-hammond'(>)George Hammond, “Big Tech Outspends Venture Capital Firms in AI Investment Frenzy,” (<)em(>)Financial Times(<)/em(>), December 29, 2023, (<)/a(>)(<)a href='https://www.ft.com/content/c6b47d24-b435-4f41-b197-2d826cce9532'(>)https://www.ft.com/content/c6b47d24-b435-4f41-b197-2d826cce9532(<)/a(>). Beyond directly managing the inputs and distribution of the AI supply chain, Big Tech also shapes the market indirectly. By dominating the AI ecosystem, it shapes the incentives and strategies of other actors by deterring entry to certain markets, encouraging innovation trajectories that complement its existing offerings, and nurturing competition in what Cecilia Rikap calls the “periphery,”11Cecilia Rikap, “Dynamics of Corporate Governance Beyond Ownership in AI,” Common Wealth, May 15, 2024, (<)a href='https://www.common-wealth.org/publications/dynamics-of-corporate-governance-beyond-ownership-in-ai'(>)https://www.common-wealth.org/publications/dynamics-of-corporate-governance-beyond-ownership-in-ai(<)/a(>). the startup orbit that becomes increasingly entangled with these ecosystems. The “level playing field,” a staple of EU policy talk, remains elusive.

In our research, we have observed a number of ways in which European companies have tried to position themselves vis-à-vis this dominant ecosystem.

Nascent European AI companies tend to move away from the purportedly capital-intensive business of training cutting-edge AI models toward downstream applications, developing software that depends on existing models and cloud infrastructure. The majority of closed foundation models are still developed in the US. The largely French and German startups in our sample, like Mistral AI and DeepL, and newer initiatives like Black Forest Labs or Poolside are the exception in that they are still competing on large-scale model making. The increasing costs of training and the wide availability of commoditized open-source models like Meta’s Llama series, however, have challenged paid subscription-based business models. For companies, the benefit of developing their own models might be outweighed by the availability of increasingly capable open-source models. Some high-profile European companies, such as Aleph Alpha, recently announced a shift away from training their own models to pivot toward AI support and facilitation.12Mark Bergen, “The Rise and Pivot of Germany’s One-Time AI Champion,” Bloomberg, September 5, 2024, (<)a href='https://www.bloomberg.com/news/articles/2024-09-05/the-rise-and-pivot-of-germany-s-one-time-ai-champion'(>)https://www.bloomberg.com/news/articles/2024-09-05/the-rise-and-pivot-of-germany-s-one-time-ai-champion(<)/a(>). Companies still developing their own models, like Mistral, are also hinting at a move toward making a developer platform their key product.13See the comment by Mistral’s cofounder in a recent interview: “This is the product that we are building: the developer platform that we host ourselves, and then serve through APIs and managed services, but that we also deploy with our customers that want to have full control over the technology, so that we give them access to the software, and then we disappear from the loop. 
So that gives them sovereign control over the data they use in their applications, for instance.” Will Henshall, “Mistral AI CEO Arthur Mensch on Microsoft, Regulation, and Europe’s AI Ecosystem,” (<)em(>)Time(<)/em(>), May 22, 2024, (<)a href='https://time.com/7007040/mistral-ai-ceo-arthur-mensch-interview/'(>)https://time.com/7007040/mistral-ai-ceo-arthur-mensch-interview(<)/a(>). These examples signal a further potential consolidation in the number of companies developing large-scale AI models.14Here, however, some important initiatives in the EU AI ecosystem are developing completely open source models. See Romain Dillet, “Kyutai Is a French AI Research Lab with a $330 Million Budget That Will Make Everything Open Source,” (<)em(>)TechCrunch(<)/em(>), November 17, 2023, (<)a href='https://techcrunch.com/2023/11/17/kyutai-is-an-french-ai-research-lab-with-a-330-million-budget-that-will-make-everything-open-source/'(>)https://techcrunch.com/2023/11/17/kyutai-is-an-french-ai-research-lab-with-a-330-million-budget-that-will-make-everything-open-source(<)/a(>).

In an effort to avoid directly competing with the hyperscale ecosystems and their dominance over distribution networks, European AI companies have tried to orient themselves toward alternative or complementary markets. This has meant focusing on specific markets by, for example, selling AI systems directly to large businesses15“An LLM fine-tuned for your use case,” Silo AI, accessed October 12, 2024, (<)a href='https://www.silo.ai/silogen'(>)https://www.silo.ai/silogen(<)/a(>). and governments;16See “PhariaAI: The Sovereign Full-Stack Solution for Your Transformation Into the AI Era,” Aleph Alpha, accessed October 12, 2024, (<)a href='https://aleph-alpha.com/phariaai/'(>)https://aleph-alpha.com/phariaai(<)/a(>). integrating artificial intelligence with existing industrial products; or cooperating with sectoral champions, as in Owkin’s partnership with Sanofi on drug discovery,17See Owkin, “Owkin Expands Collaboration with Sanofi to Apply AI for Drug Positioning in Immunology,” press release, March 21, 2024, (<)a href='https://www.owkin.com/newsfeed/owkin-expands-collaboration-with-sanofi-leveraging-ai-for-drug-positioning-in-immunology'(>)https://www.owkin.com/newsfeed/owkin-expands-collaboration-with-sanofi-leveraging-ai-for-drug-positioning-in-immunology(<)/a(>). or the partnership between Saab and military AI startup Helsing.18Saab, “Saab Signs Strategic Cooperation Agreement and Makes Investment in Helsing,” press release, September 14, 2023, (<)a href='https://www.saab.com/newsroom/press-releases/2023/saab-signs-strategic-cooperation-agreement-and-makes-investment-in-helsing'(>)https://www.saab.com/newsroom/press-releases/2023/saab-signs-strategic-cooperation-agreement-and-makes-investment-in-helsing(<)/a(>). These efforts are expected to intensify under Draghi’s newly proposed EU Vertical AI Priorities Plan. 
The plan supports vertical cooperation in AI adoption “while duly safeguarded from EU antitrust enforcement, to encourage systematic cooperation between leading EU companies for generative AI and EU-wide industrial champions in key sectors” such as automotive, manufacturing, and telecoms.19European Commission, (<)em(>)The Future of European Competitiveness: Part B, In-Depth Analysis and Recommendations(<)/em(>), September 2024, 83, (<)a href='https://commission.europa.eu/document/download/ec1409c1-d4b4-4882-8bdd-3519f86bbb92_en?filename=The%20future%20of%20European%20competitiveness_%20In-depth%20analysis%20and%20recommendations_0.pdf'(>)https://commission.europa.eu/document/download/ec1409c1-d4b4-4882-8bdd-3519f86bbb92_en(<)/a(>). While less visible than the customer-facing generative AI offerings, these industrial partnerships form a substantial part of the reality of the European AI ecosystem.

European companies also attempt to differentiate themselves through alternative moats. Instead of pushing the frontier of AI within the current paradigm of building ever-larger models and chasing state-of-the-art model capabilities, European companies emphasize compliance, trust, control, sovereignty, calibrated models, customization, and “Europeanness” as a competitive advantage in the market. A nascent ecosystem of auditing, compliance, and assurance is emerging in which compliance with applicable European regulations is used as one way to protect the market share of European companies.20See “Aleph Alpha Launches PhariaAI: The Enterprise-Grade Operating System for Generative AI Combining Future-Proof Sovereign Design with LLM Explainability and Compliance,” Aleph Alpha, August 26, 2024, (<)a href='https://aleph-alpha.com/aleph-alpha-launches-phariaai-the-enterprise-grade-operating-system-for-generative-ai-combining-future-proof-sovereign-design-with-llm-explainability-and-compliance/'(>)https://aleph-alpha.com/aleph-alpha-launches-phariaai-the-enterprise-grade-operating-system-for-generative-ai-combining-future-proof-sovereign-design-with-llm-explainability-and-compliance(<)/a(>); and Emmanuel Cassimatis, “SAP Continues to Expand Its Partnership with Mistral AI to Broaden Customer Choice,” SAP, October 9, 2024, (<)a href='https://news.sap.com/2024/10/sap-mistral-ai-partnership-expands-broaden-customer-choice/'(>)https://news.sap.com/2024/10/sap-mistral-ai-partnership-expands-broaden-customer-choice(<)/a(>). This might create new pressures to streamline the interpretation of the key regulations in a way that is conducive to the interests of European AI companies, while still maintaining the competitive advantage vis-à-vis US hyperscalers. 
However, the long-term sustainability of these moats against the creeping consolidation of the global AI ecosystem is still uncertain, with leading hyperscalers positioning in these markets as well.21See Takeshi Numoto, “Microsoft Trustworthy AI: Unlocking Human Potential Starts with Trust,” Microsoft (blog), September 24, 2024, (<)a href='https://blogs.microsoft.com/blog/2024/09/24/microsoft-trustworthy-ai-unlocking-human-potential-starts-with-trust'(>)https://blogs.microsoft.com/blog/2024/09/24/microsoft-trustworthy-ai-unlocking-human-potential-starts-with-trust(<)/a(>); “Introducing ChatGPT Enterprise,” OpenAI, August 28, 2023, (<)a href='https://openai.com/index/introducing-chatgpt-enterprise'(>)https://openai.com/index/introducing-chatgpt-enterprise(<)/a(>); and “Delivering Digital Sovereignty to EU Governments,” sponsored content from Microsoft, (<)em(>)Politico(<)/em(>), accessed October 12, 2024, (<)a href='https://www.politico.eu/sponsored-content/delivering-digital-sovereignty-to-eu-governments'(>)https://www.politico.eu/sponsored-content/delivering-digital-sovereignty-to-eu-governments(<)/a(>).

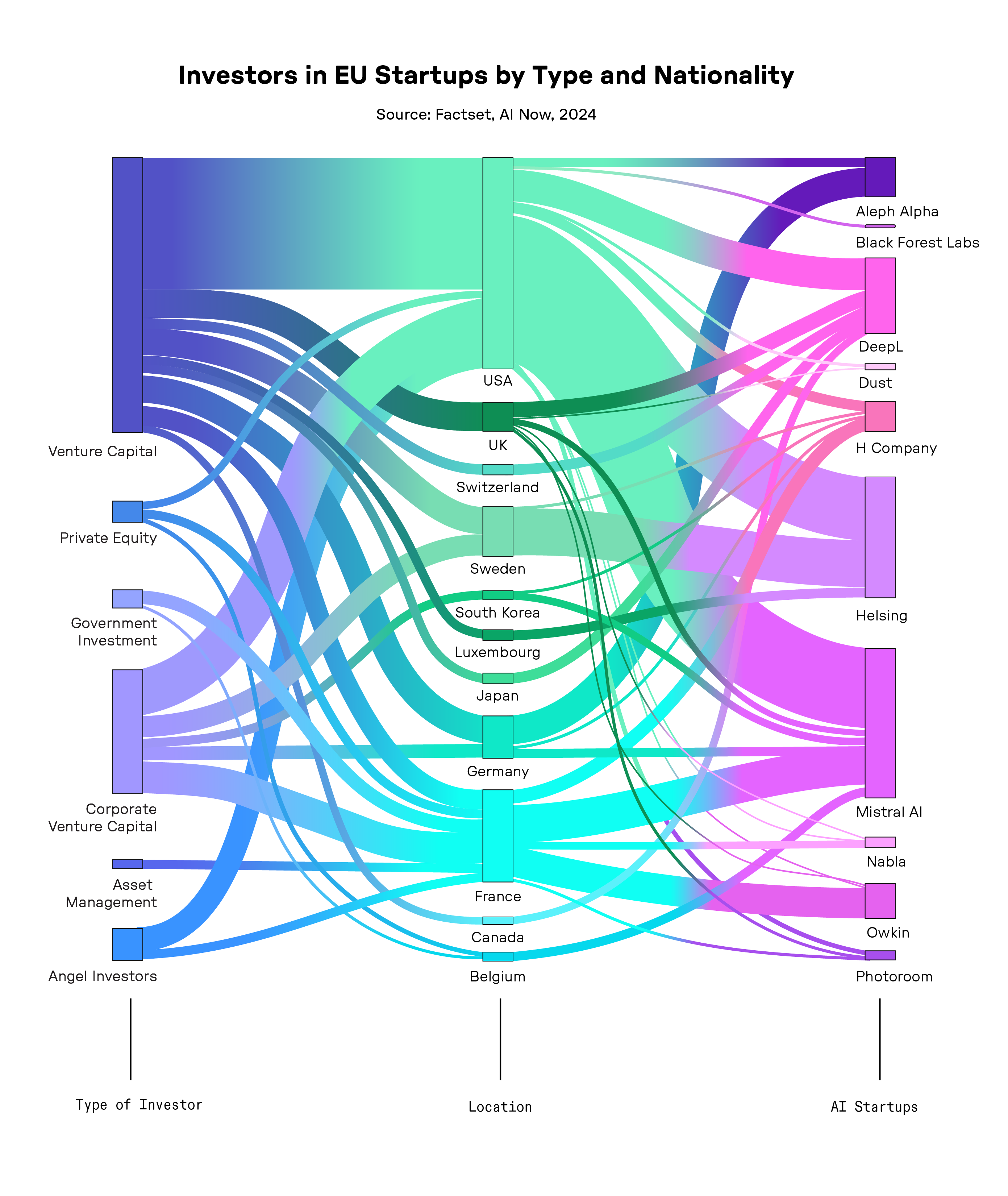

Analysis of a sample of the European AI market shows that early- and growth-stage sources of capital in the European AI market are more variegated than in the United States. In our sample of prominent EU AI companies, finance in the scale-up and growth phases consists of a mixture of local billionaires,22See Miriam Partington, “Germany’s Richest Man Wants to Ensure Europe Has an OpenAI Rival,” (<)em(>)Sifted(<)/em(>), October 26, 2023, (<)a href='https://sifted.eu/articles/heilbronn-franken-ai'(>)https://sifted.eu/articles/heilbronn-franken-ai(<)/a(>); and Mark Bergen, “French Billionaire Xavier Niel Is Building a ChatGPT Competitor with a ‘Thick French Accent’,” Bloomberg, July 4, 2024, (<)a href='https://fortune.com/europe/2024/07/04/ai-lab-french-billionaire-xavier-niel-takes-on-chatgpt-reveals-voice-assistant-with-thick-french-accent/'(>)https://fortune.com/europe/2024/07/04/ai-lab-french-billionaire-xavier-niel-takes-on-chatgpt-reveals-voice-assistant-with-thick-french-accent(<)/a(>). public funding through national development banks,23Bpifrance, “Bpifrance Supports French Companies in the Artificial Intelligence Revolution,” June 30, 2023, (<)a href='https://www.bpifrance.com/2023/06/30/bpifrance-supports-french-companies-in-the-artificial-intelligence-revolution/'(>)https://www.bpifrance.com/2023/06/30/bpifrance-supports-french-companies-in-the-artificial-intelligence-revolution(<)/a(>). existing sectoral champions,24See Saab, “Saab Signs Strategic Cooperation Agreement and Makes Investment in Helsing”; and Owkin, “Owkin Becomes ‘Unicorn’ with $180M Investment from Sanofi,” press release, November 18, 2021, (<)a href='https://www.owkin.com/newsfeed/owkin-becomes-unicorn-with-180m-investment-from-sanofi-and-four-new-collaborative-projects'(>)https://www.owkin.com/newsfeed/owkin-becomes-unicorn-with-180m-investment-from-sanofi-and-four-new-collaborative-projects(<)/a(>). 
corporate venture investments,25Kyle Wiggers, “AI Coding Startup Poolside Raises $500M from eBay, Nvidia, and Others,” (<)em(>)TechCrunch(<)/em(>), October 2, 2024, (<)a href='https://techcrunch.com/2024/10/02/ai-coding-startup-poolside-raises-500m-from-ebay-nvidia-and-others/'(>)https://techcrunch.com/2024/10/02/ai-coding-startup-poolside-raises-500m-from-ebay-nvidia-and-others(<)/a(>). and international (primarily US) venture capital.26See Jeannette zu Fürstenberg, Hemant Taneja, Quentin Clark, and Alexandre Momeni, “Tripling Down on Mistral AI,” General Catalyst, June 11, 2024, (<)a href='https://www.generalcatalyst.com/perspectives/tripling-down-on-mistral-ai'(>)https://www.generalcatalyst.com/perspectives/tripling-down-on-mistral-ai(<)/a(>); Lightspeed, “Partnering with Helsing, Europe’s Leader in AI Enabled Defense,” July 11, 2024, (<)a href='https://lsvp.com/stories/partnering-with-helsing-europes-leader-in-ai-enabled-defense/'(>)https://lsvp.com/stories/partnering-with-helsing-europes-leader-in-ai-enabled-defense(<)/a(>); and Supantha Mukherjee, “VC Firm Accel Raises $650 Mln to Invest in AI, Cybersecurity Startups,” Reuters, May 13, 2024, (<)a href='https://www.reuters.com/business/finance/vc-firm-accel-raises-650-mln-invest-ai-cybersecurity-startups-2024-05-13/'(>)https://www.reuters.com/business/finance/vc-firm-accel-raises-650-mln-invest-ai-cybersecurity-startups-2024-05-13(<)/a(>).

These patterns lead to a different and more complex political economy in AI development, with different actors, timescales to profitability, and logics of operation than US AI startups, whose funding is often anchored in the existing surpluses of tech giants.27For instance, research has identified some key limitations in the kinds of technologies the VC-funding model is equipped to fund. Due to the short-term nature and rapid scalability imperative underlying the VC funding model, the investments are often directed at readily commercializable products, such as software and products. More transformative and long-term investments, such as the buildup of public digital infrastructure, do not easily fit this framework. See Josh Lerner and Ramana Nanda, “Venture Capital’s Role in Financing Innovation: What We Know and How Much We Still Need to Learn,” (<)em(>)Journal of Economic Perspectives(<)/em(>) 34, no. 3 (Summer 2020): 237–261, (<)a href='https://www.aeaweb.org/articles?id=10.1257/jep.34.3.237'(>)https://www.aeaweb.org/articles?id=10.1257/jep.34.3.237(<)/a(>). However, for the scale-up phase, Europe lacks the equivalent of Silicon Valley, in which corporate giants and a deep venture capital ecosystem have underwritten the stupefying costs of scalable AI infrastructure. This perceived gap has led to recent calls to free up the assets in European pension and insurance funds to facilitate the scaling of European AI start-ups through strengthening the capital markets union and relaxing financial regulations.28Chiara Fratto, Matteo Gatti, Anastasia Kivernyk, Emily Sinnott, and Wouter van der Wielen, “The Scale-Up Gap: Financial Market Constraints Holding Back Innovative Firms in the European Union,” European Investment Bank, July 2024, (<)a href='https://www.eib.org/attachments/lucalli/20240130_the_scale_up_gap_en.pdf'(>)https://www.eib.org/attachments/lucalli/20240130_the_scale_up_gap_en.pdf(<)/a(>). 
This creates new beneficiaries and reorients capital flows in European capital markets.

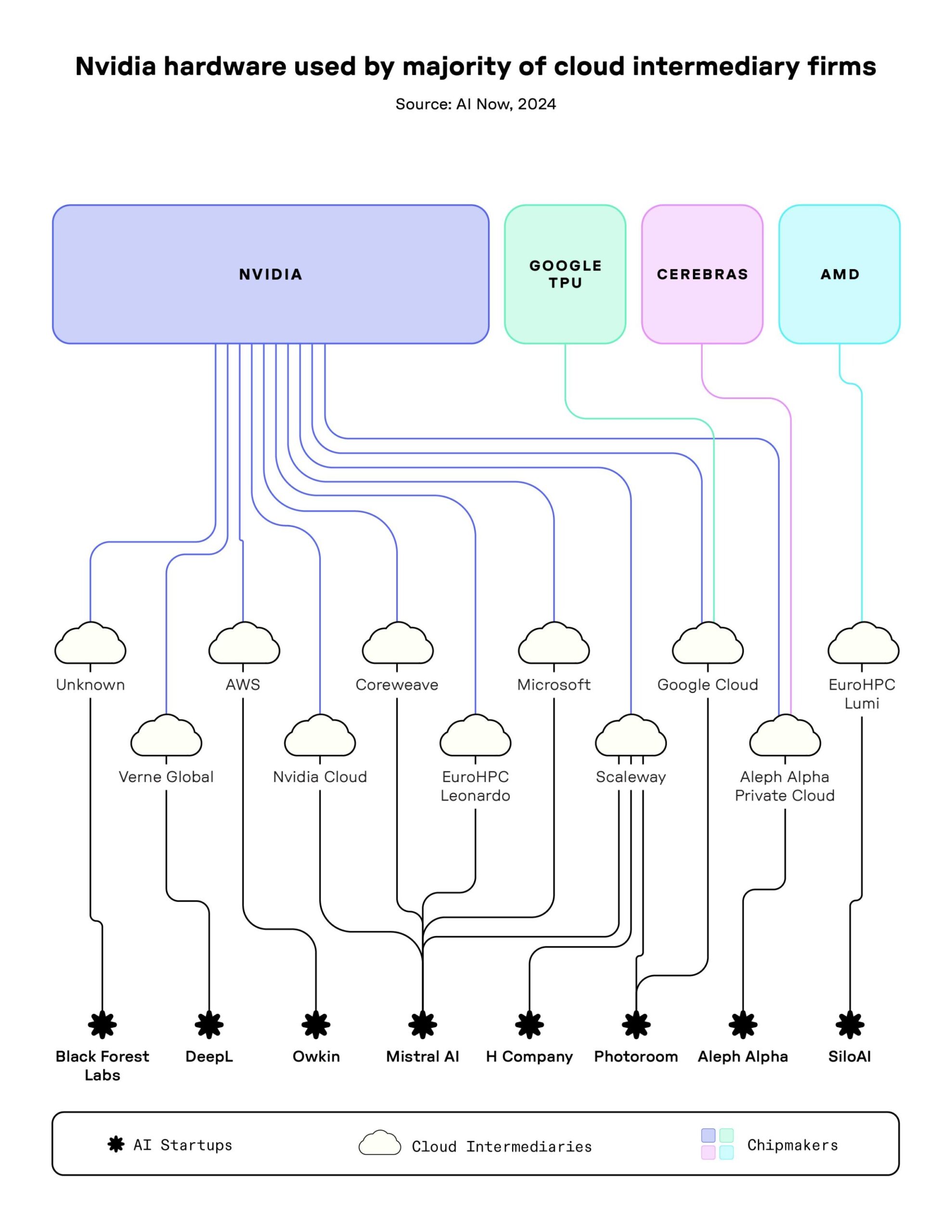

Unlike in the United States, in Europe there is some diversity in the compute ecosystem underpinning the training and inference of AI models. In our sample, we noticed that some EU companies are trying to manage their dependence on hyperscalers. Instead of leaning heavily on Microsoft, Google, and Amazon, such companies are opting for a mix of public and private computing resources; a multicloud strategy; and reliance on alternative, smaller compute providers known as neoclouds.29Dylan Patel and Daniel Nishball, “AI Neocloud Playbook and Anatomy,” SemiAnalysis, October 3, 2024, (<)a href='https://www.semianalysis.com/p/ai-neocloud-playbook-and-anatomy'(>)https://www.semianalysis.com/p/ai-neocloud-playbook-and-anatomy(<)/a(>). While this points to some diversity in medium-sized compute providers, on a deeper level the landscape is centralized. The majority of the computation clusters are fitted with Nvidia GPUs, the most efficient for large-scale training, with competitors such as AMD and Intel attempting to make a dent in this ecosystem by acquiring their own large-scale AI model providers.30AMD, “AMD Completes Acquisition of Silo AI to Accelerate Development and Deployment of AI Models on AMD Hardware,” press release, August 12, 2024, (<)a href='https://www.amd.com/en/newsroom/press-releases/2024-8-12-amd-completes-acquisition-of-silo-ai-to-accelerate.html'(>)https://www.amd.com/en/newsroom/press-releases/2024-8-12-amd-completes-acquisition-of-silo-ai-to-accelerate.html https://stability.ai/news/building-new-ai-supercomputer(<)/a(>). This points to the sustained and centralized material dependencies underpinning the current AI ecosystem.31Jai Vipra and Sarah Myers West, “Computational Power and AI: Comment Submission,” (<)em(>)Computational Power and AI(<)/em(>), June 22, 2023, AI Now Institute, (<)a href='https://ainowinstitute.org/publication/policy/computational-power-and-ai'(>)https://ainowinstitute.org/publication/policy/computational-power-and-ai(<)/a(>). 
The EUV lithography machines manufactured by the Dutch corporation ASML, which are required for the production of leading-edge chips, are almost the only European lever in this material AI ecosystem. Hence, after the lessons learned from the Chips Act fiasco with Intel (see Margarida Silva and Jeroen Merk’s contribution), the available EU policy interventions to shape the material constraints of AI ecosystems seem to be largely limited to using regulation or procurement policy to kindle competition among existing, non-European hardware providers by placing relatively modest orders for the European High Performance Computing (EuroHPC) clusters.

This flicker of diversity vanishes as scale increases. As European AI companies attempt to scale to the global customer-facing market, they are inexorably pulled into the orbit of the hyperscalers. For companies seeking a customer base large enough to sustain their business model, and access to the computation needed to run large-scale AI inference, the path to profitability runs through Big Tech. This explains why European AI companies like Mistral form partnerships with Microsoft,32See Eric Boyd, “Microsoft and Mistral AI Announce New Partnership to Accelerate AI Innovation and Introduce Mistral Large First on Azure,” Microsoft (blog), February 26, 2024, (<)a href='https://azure.microsoft.com/en-us/blog/microsoft-and-mistral-ai-announce-new-partnership-to-accelerate-ai-innovation-and-introduce-mistral-large-first-on-azure'(>)https://azure.microsoft.com/en-us/blog/microsoft-and-mistral-ai-announce-new-partnership-to-accelerate-ai-innovation-and-introduce-mistral-large-first-on-azure(<)/a(>). or why Silo AI offers its Viking models on Google Cloud.33See “Silo AI Releases Viking on Google Cloud: A New Open Large Language Model for Nordic Languages and Code,” Silo AI (blog), last updated June 11, 2024, accessed October 12, 2024, (<)a href='https://www.silo.ai/blog/silo-ai-releases-viking-on-google-cloud-a-new-open-large-language-model-for-nordic-languages-and-code'(>)https://www.silo.ai/blog/silo-ai-releases-viking-on-google-cloud-a-new-open-large-language-model-for-nordic-languages-and-code(<)/a(>). The new model gardens offered by hyperscalers, such as Google’s Vertex AI Model Garden, Microsoft’s Azure AI, or Amazon Bedrock, become the primary way for developers to access the large-scale AI models manufactured by the hyperscalers themselves or produced by third-party providers. This platforming replays the logic of the previous waves of digital consolidation that turned hyperscalers into global digital champions.

The European AI market is a complex and constantly evolving space, striving to coexist within a highly concentrated large-scale AI ecosystem that heavily favors dominant digital firms, which are actively shaping the innovation trajectory of AI to serve their own interests. In this parasitic relationship, the trajectory of European AI is both indirectly and directly shaped by the logic of a highly concentrated global AI market. Taking this into account helps us understand the potential and likely trajectories of European AI ecosystems.

Key Themes

A Public-Interest Vision for AI in Europe

The EU’s AI strategy needs a coherent public-interest vision to help it move beyond the poorly defined and narrow motivations of sovereignty and competitiveness.

This must start with rigorous scrutiny of the premise that investing in AI will lead to societal and economic benefit in the first place—including the pervasive (but empirically contested) claim of productivity gains.

The promises of exponential AI-generated productivity growth are the common parlance of tech industry leaders and business-sector forecasters, whereas more careful analysis has identified significant—but more modest—productivity gains.34Daron Acemoglu, “Don’t Believe the AI Hype,” (<)em(>)Project Syndicate(<)/em(>), May 21, 2024, (<)a href='https://www.project-syndicate.org/commentary/ai-productivity-boom-forecasts-countered-by-theory-and-data-by-daron-acemoglu-2024-05'(>)https://www.project-syndicate.org/commentary/ai-productivity-boom-forecasts-countered-by-theory-and-data-by-daron-acemoglu-2024-05(<)/a(>). Indeed, the monomaniacal push for increased adoption of automation technologies has not always been a positive economic force,35Lorraine Daston, (<)em(>)Rules: A Short History of What We Live By(<)/em(>) (Princeton: Princeton University Press, 2022), 1–384. leading to increased costs, increased inequality, and reduced resilience without corresponding increases in welfare.36Daron Acemoglu and Pascual Restrepo, “Tasks, Automation, and the Rise in US Wage Inequality,” (<)em(>)Econometrica(<)/em(>) 90, no. 5 (September 2022): 1973–2016, (<)a href='https://doi.org/10.3982/ECTA19815'(>)https://doi.org/10.3982/ECTA19815(<)/a(>). The relationship between rapid AI adoption, investments in AI, and an increase in productivity and economic growth is anything but self-evident, especially in a context as diverse as the various national economies of the European Union, and given that most leading European AI startups are based in Germany and France. This calls for vigorous debate.

Further complicating matters is the fact that EU discussions of AI competitiveness are mired in unclear definitions, which leads the debate astray. “Competitiveness,” regardless of how self-evident the term may seem, has repeatedly been found to collapse upon further scrutiny. “A meaningless term when applied to national economics,” and “a dangerous obsession,” is how the economist Paul Krugman described competitiveness in response to former Commission President Jacques Delors’s preoccupation with the concept in the early 1990s. While the matter is not straightforward, it is important to keep in mind that countries, or economic blocs, do not compete like players on a field; rarely is it even obvious who the players are and what the game ought to be. A similar ambiguity applies to both “sovereignty” and the term “artificial intelligence.” Calling for blanket adoption of “AI” conceals significant differences in resource requirements between large-scale AI and smaller machine learning models. “Sovereignty” is often used interchangeably with the terms state sovereignty, data sovereignty, or strategic autonomy, with unclear, and at times contradictory, implications for what sovereign technology should look like.37Johan David Michels, Christopher Millard, and Ian Walden, “On Cloud Sovereignty: Should European Policy Favour European Clouds?” Queen Mary Law Research Paper, no. 412, November 10, 2023, (<)a href='https://papers.ssrn.com/sol3/papers.cfm?abstract_id=4619918'(>)https://papers.ssrn.com/sol3/papers.cfm?abstract_id=4619918(<)/a(>). Similar tensions play out even around terms like digital public infrastructures, which are being driven by multiple distinct (and sometimes conflicting) institutional interests. As Zuzanna Warso warns in Chapter XII, the push for digital public infrastructure risks emphasizing fragmented digitization at the expense of prioritizing public attributes, public functions, and public ownership of digital infrastructure. 
If investments in digital infrastructure are driven by an aspirational desire to lead in large-scale AI development, the bet is particularly risky—as the infrastructure for training large-scale models cannot easily be repurposed for other kinds of uses.

All of these factors should give us pause to reflect on the terms currently framing the debate. We need a more critical and inclusive debate about which public, and whose interest, European industrial policy on AI is intended to serve.38Daniel Mügge, “EU AI Sovereignty: For Whom, to What End, and to Whose Benefit?” (<)em(>)Journal of European Public Policy(<)/em(>) 31, no. 8 (2024): 2200–2225, (<)a href='https://www.tandfonline.com/doi/full/10.1080/13501763.2024.2318475'(>)https://www.tandfonline.com/doi/full/10.1080/13501763.2024.2318475(<)/a(>). In her chapter sketching out a vision for the EuroStack, an increasingly popular moniker for publicly funded alternative infrastructure, Francesca Bria foregrounds the question of whose needs these infrastructures serve: “Ultimately, the EuroStack is not just a technological project—it is a political one.” The EU must critically assess who is set to benefit from AI leadership aspirations and which constituencies will have a say in shaping this vision.

Industrial Policy Should Challenge, Not Entrench, Existing Concentrations of Power in the AI Stack

At minimum, industrial policy should be designed so that it doesn’t worsen the concentrations of power in the AI stack by funneling public money to companies that already dominate the market.

Any vision for the values and goals that should drive Europe’s industrial strategy on AI needs to seriously wrestle with concentrated power and the entanglements within the AI ecosystem. Without doing so, there is a real risk that public money will end up primarily flowing toward the companies that already dominate the market, something we have already seen in past interventions like the European Chips Act, or in the early proposals in the United States to develop public computing capacity.39AI Now Institute and Data and Society Research Institute, “Democratize AI? How the Proposed National AI Research Resource Falls Short,” (<)em(>)AI Industrial Policy(<)/em(>), AI Now Institute, October 5, 2021, (<)a href='https://ainowinstitute.org/publication/democratize-ai-how-the-proposed-national-ai-research-resource-falls-short'(>)https://ainowinstitute.org/publication/democratize-ai-how-the-proposed-national-ai-research-resource-falls-short(<)/a(>). In his chapter, Francesco Bonfiglio reflects on the shortcomings of Gaia-X, Europe’s attempt to build a sovereign cloud, and calls for a paradigm shift away from building national champions towards prioritizing physical infrastructure and a federated approach towards the cloud. From the failure to aggressively seek competition remedies that tackle concentrated power in the AI market, to a resignation to the dominance of and dependence on US hyperscalers and their version of what a “sovereign cloud” could look like, to calls for an emphasis on scale as beneficial for innovation and resilience,40Von Thun, “Europe Must Not Tie Its Hands in the Fight Against Corporate Power.” the Commission is not giving concentrated power in AI the attention it deserves.

One reason for this may be that the complex ways incumbents expand and abuse their power aren’t sufficiently addressed in Europe. Cecilia Rikap suggests thinking of the AI market as an “entrenched and established core of Big Tech surrounded by a turbulent periphery.” From this perspective, even a seemingly thriving EU market of startups that builds models or applications would not challenge but reinforce the high degree of concentration we see in the large-scale AI market already: being located at the core allows hyperscalers and Big Tech firms to leverage their control over data, distribution networks, and computing infrastructure to “skew the innovation trajectory and profit flows” in their direction.

In Chapter III, Cristina Caffarra argues that the conventional rules that are traditionally applied to merger enforcement in Europe will inevitably fail to capture the essence of concerns around Big Tech dealmaking with AI companies. Regulators will need to move beyond traditional narrow, post hoc antitrust analysis and deploy a fitting theory of harm to name an increasingly clear dynamic: by aggressively “weaponizing” their scaled assets into new applications, Big Tech firms and their ecosystems entrench their first-mover advantages and effectively preempt competition from challengers.

Large-Scale AI as Inconsistent with Europe’s Climate Goals

Large-scale AI’s current trajectory has serious climate impacts that might stand in irreconcilable tension with Europe’s environmental and green transition goals.

In European policy discussions, investing in AI is often framed as a necessity to achieve Europe’s climate goals, either directly by using “AI for good” to fight climate change, or indirectly by securing the prosperity Europe needs to fund the “green transition.” In Chapter IV, Fieke Jansen and Michelle Thorne show how the climate implications of large-scale AI pose an existential question for this particular technological trajectory. The production of chips and the operation of data centers are both energy-intensive and environmentally damaging, and in some European countries, such as Ireland, data centers already account for a fifth of the country’s total electricity consumption.41George Kamiya and Paolo Bertoldi, (<)em(>)Energy Consumption in Data Centres and Broadband Communication Networks in the EU(<)/em(>), Publications Office of the European Union, February 16, 2024, (<)a href='https://publications.jrc.ec.europa.eu/repository/handle/JRC135926'(>)https://publications.jrc.ec.europa.eu/repository/handle/JRC135926(<)/a(>). The recent massive global investments in AI and data infrastructures are poised to exacerbate this situation even further.42Microsoft Source, “BlackRock, Global Infrastructure Partners, Microsoft and MGX Launch New AI Partnership to Invest in Data Centers and Supporting Power Infrastructure,” press release, September 17, 2024, (<)a href='https://news.microsoft.com/2024/09/17/blackrock-global-infrastructure-partners-microsoft-and-mgx-launch-new-ai-partnership-to-invest-in-data-centers-and-supporting-power-infrastructure/'(>)https://news.microsoft.com/2024/09/17/blackrock-global-infrastructure-partners-microsoft-and-mgx-launch-new-ai-partnership-to-invest-in-data-centers-and-supporting-power-infrastructure(<)/a(>).

In this context, positioning AI as a climate solution is not just misleading; it also distracts from an urgent policy priority, allowing unsustainable and inequitable systems to thrive while delaying crucial policy actions. Given the Commission’s continued (albeit weakened43WWF, “Von der Leyen Secures Second Term, Diluted European Green Deal Lives On,” July 18, 2024, (<)a href='https://www.wwf.eu/?14383941/Von-der-Leyen-secures-second-term-diluted-European-Green-Deal-lives-on'(>)https://www.wwf.eu/?14383941/Von-der-Leyen-secures-second-term-diluted-European-Green-Deal-lives-on(<)/a(>).) commitment to a green and just transition, aspiring to global leadership in AI seems counterproductive. Jansen and Thorne suggest that Europe needs to redefine innovation by placing environmental justice at the core of its industrial policy.

The sustainability question is not just of regional importance. If Europe decides to bet on large-scale AI, environmental exploitation in its supply chain, particularly of raw materials, will be felt elsewhere. As UN Trade and Development (UNCTAD) stated in their 2024 report on the digital economy, “developing countries bear the brunt of the environmental costs of digitalization while reaping fewer benefits.”44(<)em(>)2024 Digital Economy Report: Shaping an Environmentally Sustainable and Inclusive Digital Future(<)/em(>), United Nations Conference on Trade and Development, 2024, (<)a href='https://unctad.org/publication/digital-economy-report-2024'(>)https://unctad.org/publication/digital-economy-report-2024(<)/a(>).

Conditionalities to Industrial Policy Are Essential to Ensure Public Benefit

Public funding or access to other public resources (including land, water, and energy) must be attached to conditions that guarantee outcomes that serve the broader public interest. This includes accountability, climate, and labor conditionalities and standards. Conditionalities must be crafted through participatory processes that involve civil society, trade unions, and affected communities, with guaranteed transparency into the implementation of conditionalities.

Europe’s newfound embrace of industrial policy is often framed in opposition to what has come before: a focus on regulation instead of, or even at the expense of, investing in alternatives. However, industrial policy never truly went away in Europe. Over the course of the Digital Decade that is about to come to an end, Europe has invested billions in flagship projects like the European sovereign cloud Gaia-X, acted as a key player in European venture capital markets, and allocated billions of euros to research and development through Horizon Europe and Digital Europe Programs.

History tells us that without emphasis on conditionalities and accountability, industrial policy will work to serve narrow industry interests at the expense of the broader public. Europe’s previous ventures into industrial policy are no exception. Both the European Chips Act and the innovation package for AI startups and SMEs show that past EU attempts to invest in AI economies and the infrastructures that underpin them have left concentrated power in the AI stack largely unaddressed, and have instead solidified the role of a few incumbents. In Chapter VI, drawing from investigations done by the Centre for Research on Multinational Corporations, Margarida Silva and Jeroen Merk show how the EU Chips Act failed to impose social, environmental, or redistributive conditions on the public subsidies granted, and excluded the public from both the negotiations and the ability to scrutinize the resulting agreements. Combined, this led to the threat of regulatory capture by well-positioned companies.

Conditionalities could also require meaningful openness in the development and release of publicly funded AI projects. But as Udbhav Tiwari argues in Chapter IX, for open source initiatives to meaningfully challenge concentrated power and the trend toward homogeneity, there will also need to be a focus on shifting broader structural conditions in the market, including via robust antitrust enforcement.

What different calls for conditionalities have in common is the involvement of a much more diverse public. In fact, this is also something Draghi calls for: strong social dialogue that fosters collaboration among trade unions, employers, and civil society, considered essential for setting goals and actions to transform Europe’s economy toward greater inclusivity and equity. Making these commitments a tangible reality is a true challenge for European tech policy in the coming years.

Industrial Policy Must Not Promote Uncritical Application of AI Into Sensitive Social Domains

Incentivizing blanket AI adoption in the public sector could contribute to a hollowing out of the state, a waste of public funds, single points of failure, and rights abuses, especially when deployed in risky contexts or in ways that are incompatible with AI’s inherent limitations.

Europe’s ambition to boost the adoption of AI in the public sector is premised on the assumption that AI will improve public services. Beyond unclear definitions—it is unclear whether “improvement” means better quality or cheaper delivery—such hopes need to be grounded in empirical evidence about the actual capabilities, benefits, and inherent limitations of AI technologies and their ability to increase the quality and efficiency of public services. This evidence is frequently lacking.45“Lessons from the FDA Model,” (<)em(>)Lessons from the FDA for AI(<)/em(>), AI Now Institute, August 1, 2024, (<)a href='https://ainowinstitute.org/publication/section-3-lessons-from-the-fda-model'(>)https://ainowinstitute.org/publication/section-3-lessons-from-the-fda-model(<)/a(>). Instead, a rich body of research, including from the European Union, has documented the risks and harms associated with using AI to cut costs in the public sector. In 2019, for instance, Philip Alston, the UN special rapporteur on extreme poverty and human rights, warned that the rapid digitization and automation of welfare systems is harming the poorest and most vulnerable people in society.46United Nations Human Rights, “World Stumbling Zombie-Like into a Digital Welfare Dystopia, Warns UN Human Rights Expert,” press release, October 17, 2019, (<)a href='https://www.ohchr.org/en/press-releases/2019/10/world-stumbling-zombie-digital-welfare-dystopia-warns-un-human-rights-expert'(>)https://www.ohchr.org/en/press-releases/2019/10/world-stumbling-zombie-digital-welfare-dystopia-warns-un-human-rights-expert(<)/a(>).
The European Anti-Poverty Network has coined the term “digitally induced poverty,” a phenomenon produced by a combination of the automation of discrimination, digital exclusion, and digitization as a tool for implementing austerity.47European Anti-Poverty Network, An Exploratory Study on the Use of Digital Tools by People Experiencing Poverty, 2024, (<)a href='https://www.eapn.eu/an-exploratory-study-on-the-use-of-digital-tools-by-people-experiencing-poverty'(>)https://www.eapn.eu/an-exploratory-study-on-the-use-of-digital-tools-by-people-experiencing-poverty(<)/a(>). Amnesty International,48Amnesty International, “Trapped by Automation: Poverty and Discrimination in Serbia’s Welfare State,” December 4, 2023, (<)a href='https://www.amnesty.org/en/latest/research/2023/12/trapped-by-automation-poverty-and-discrimination-in-serbias-welfare-state'(>)https://www.amnesty.org/en/latest/research/2023/12/trapped-by-automation-poverty-and-discrimination-in-serbias-welfare-state(<)/a(>). Human Rights Watch,49Amos Toh, “Automated Neglect: How The World Bank’s Push to Allocate Cash Assistance Using Algorithms Threatens Rights,” Human Rights Watch, June 13, 2023, (<)a href='https://www.hrw.org/report/2023/06/13/automated-neglect/how-world-banks-push-allocate-cash-assistance-using-algorithms'(>)https://www.hrw.org/report/2023/06/13/automated-neglect/how-world-banks-push-allocate-cash-assistance-using-algorithms(<)/a(>). Algorithm Watch,50Alina Yanchur, “‘All Rise for the Honorable AI’: Algorithmic Management in Polish Electronic Courts,” Algorithm Watch, May 27, 2024, (<)a href='https://algorithmwatch.org/en/polish-electronic-courts'(>)https://algorithmwatch.org/en/polish-electronic-courts(<)/a(>). and A1151(<)a href='https://antisocijalnekarte.org/en'(>)https://antisocijalnekarte.org/en(<)/a(>). have all documented how automating the delivery of essential public services can lead to discrimination and exclusion, while shifting the burden of proof to those who are already marginalized.

One challenge that public authorities face, as MEP Kim Van Sparrentak and Simona de Heer explain in Chapter VII, involves the limitations set by the EU Procurement Directives. Public authorities currently need to choose vendors based on the lowest price, rather than considering strategic autonomy, sustainability, social standards, privacy, or the long-term governance of the end product—criteria that are crucial to ensure that taxpayer money is being spent in ways that are best for society and the economy.

Sarah Chander and Seda Gürses highlight some of the more fundamental issues that come with the blanket adoption of AI in their chapter, From Infrastructural Power to Redistribution. A punitive vision of security dominates many EU investments that fuse the concept of public safety with police, borders, and the military. Pushing for the blanket adoption of AI underestimates how expanding computational infrastructure can lead to a transformation of the economy that ultimately places the economic interests of tech companies at the heart of public and private institutions.52Agathe Balayn and Seda Gürses, “Misguided: AI Regulation Needs a Shift in Focus,” (<)em(>)Internet Policy Review(<)/em(>) 13, no. 3, September 30, 2024, (<)a href='https://policyreview.info/articles/news/misguided-ai-regulation-needs-shift/1796'(>)https://policyreview.info/articles/news/misguided-ai-regulation-needs-shift/1796(<)/a(>).

Thinking about alternatives, or even considering AI’s inherent limitations, requires a shift away from weighing risks and benefits toward asking more fundamental questions about the role that AI technologies can and should play in delivering public services, or in European society more broadly.53AI Now Institute, “AI Now Submission to the Office and Management and Budget on AI Guidelines,” December 20, 2023, (<)a href='https://ainowinstitute.org/publication/ai-now-submission-to-the-office-and-management-and-budget-on-ai-guidelines'(>)https://ainowinstitute.org/publication/ai-now-submission-to-the-office-and-management-and-budget-on-ai-guidelines(<)/a(>).

Innovation Grows with Bold Regulatory Enforcement

Rather than pit innovation against regulation, industrial policy investments should move in tandem with bold regulatory enforcement, with the goal of shaping innovation in the public interest.

The idea that tech regulation is merely a burden for European companies and harmful to European innovation is a long-standing argument advanced by US tech companies and their allies. This framing, which now also informs some of the thinking behind Europe’s nascent industrial strategy, is based on a false distinction. There are no unregulated markets—only differently regulated markets. It is a key, and unavoidable, task of policymakers to shape the patterns of economic action. Regulation can proactively shape digital economies and ensure that companies pursue innovation that strengthens, rather than undermines, core European values such as fundamental rights.

One key way to flip the script is to underscore that the lack of effective enforcement of existing data protection and competition law has contributed to the kind of market concentration we see across the AI stack and to the business models that sustain this concentration. Allowing the current surveillance-based business models to proliferate has prevented alternative business models from emerging. Any diagnosis of Europe’s inability to compete in this paradigm must, then, at least partially point to the failure to adopt a sharp enforcement posture on unchecked commercial surveillance and on the consolidation of market position across the AI stack.

This regulation-versus-innovation paradigm can also distract from the important question of what kinds of regulatory approaches and enforcement mechanisms will best be able to discipline and shape the market in the public interest. In fact, while the AI Act is routinely invoked to argue that regulation is too complex and burdensome, the AI Act is mired in loopholes and exemptions in areas concerning direct harms to human life—for instance in domains relating to police, migration control, and security actors.54#ProtectNotSurveil, “Joint statement – A Dangerous Precedent: How the EU AI Act Fails Migrants and People on the Move,” March 13, 2024, (<)a href='https://www.accessnow.org/press-release/joint-statement-ai-act-fails-migrants-and-people-on-the-move'(>)https://www.accessnow.org/press-release/joint-statement-ai-act-fails-migrants-and-people-on-the-move(<)/a(>). The risk-based product safety approach that underlies the AI Act is not sufficient and sometimes even counterproductive when it comes to protecting people from fundamental rights violations and harm in many sensitive contexts.55European Center for Not-for-Profit Law, “ECNL, Liberties and European Civic Forum Put Forth an Analysis of the AI Act from the Rule of Law and Civic Space Perspectives,” April 3, 2024, (<)a href='https://ecnl.org/news/packed-loopholes-why-ai-act-fails-protect-civic-space-and-rule-law'(>)https://ecnl.org/news/packed-loopholes-why-ai-act-fails-protect-civic-space-and-rule-law(<)/a(>).56European Disability Forum, “EU’s AI Act Fails to Set Gold Standard for Human Rights,” April 3, 2024, (<)a href='https://www.edf-feph.org/publications/eus-ai-act-fails-to-set-gold-standard-for-human-rights'(>)https://www.edf-feph.org/publications/eus-ai-act-fails-to-set-gold-standard-for-human-rights(<)/a(>).

Europe’s Place in the World

In what is perceived as an existential race for geopolitical influence and competitiveness vis-à-vis the US and China, and amid widespread fears of Europe’s subordination, Europe must not lose sight of the many ways in which its policy orientation will shape the landscape of possibility, not just for the EU but also for the rest of the world.

Digital trade policy, and in particular requirements for maintaining low trade barriers for digital goods and services, which often imply minimal or no regulation or conditionalities, can significantly shape the field of possibility when it comes to industrial policy. As Burcu Kilic argues in Chapter VIII, the EU’s digital trade policy has long taken a neoliberal approach; Draghi’s recommendations, which focus on keeping trade barriers low to ensure continued access to the latest AI models and processors in the US, are no exception. Primarily benefiting Big Tech, the EU’s neoliberal approach to trade has promoted tech-driven globalization while remaining largely disconnected from broader EU domestic policies and priorities (with the exception of privacy). In this paradigm, many industrial policy measures to shape the trajectory of tech development could be treated as trade barriers, preventing not just Europe, but also other regions and nations that Europe trades with, from adopting an ambitious industrial policy strategy that prioritizes people and the planet. A neoliberal trade agenda, Kilic argues, is simply incompatible with industrial policy.

In her chapter, Francesca Bria urges Europe not to be purely inward-looking in its industrial policy orientation, even if it is motivated primarily by the desire for sovereignty, pointing to the possibilities for more global alliances motivated by challenging the current concentrations of power. While “the Brussels effect,”57Anu Bradford, The Brussels Effect: How the European Union Rules the World (New York: Oxford University Press, 2020), (<)a href='https://doi.org/10.1093/oso/9780190088583.001.0001'(>)https://doi.org/10.1093/oso/9780190088583.001.0001(<)/a(>). a process of unilateral regulatory globalization caused by the European Union, is ultimately unidirectional and has not always been positively received by the countries that are nudged to comply with European laws, Bria hopes that through a stronger emphasis on building alternatives, Europe can become a collaborator in sharing a fairer digital future.

Conclusion

This collection features a broad spectrum of ideas for what a public-interest vision of AI beyond competitiveness and sovereignty could look like. These critical interventions provoke a rethinking of the fundamental ideas underpinning European tech and innovation policy. They offer different approaches to the values and norms that should underpin European industrial policy on AI, and the economic and societal outcomes that such interventions should ultimately seek to create.

While authors differ in their stances, backgrounds, and political positioning on these issues, they are united in showing that past tools and approaches are not fit for purpose. Neither incremental change, nor significant investments into a predefined innovation trajectory, will benefit the public interest. Instead, European tech and innovation policy needs a vision and a radical rethink.

To engage in a radical reset, Europe must grapple with no less than existential questions about the direction and nature of its digital future. Answering these questions requires abandoning comfortable, established talking points, superficial analyses, and bland statements that stand in for a serious discussion of what technology politics could be:

- What kind of (digital) future does Europe want?

- What role can, and should, AI technologies play in this future?

- Who will have a say in determining the path?

These are the challenging questions we will start to address in this report and will continue to grapple with in the coming years.
